This chapter discusses a way of hybridising the engines discussed in Chapters One and Two to form an engine termed the Intermittency Engine. In doing so I first summarise the strengths and weaknesses of the engines in the previous chapters. I also present the issue of musical memorability in both the branching and generative techniques used in the other chapters of this paper. I suggest a way to combine the strengths of both branching and generative engines to create a more immersive hybrid music engine. This solution will come in the form of a system that uses a concept of pre-composed islands of music (defined below) amid seas of generative musical personalities (as defined in the previous chapter).

The discussion of the branching and generative music engines above largely illustrated the strengths of each engine. Though some discussion of limitations has taken place, it is necessary to analyse these limitations further to provide a clearer picture of the useful qualities of each, which will then be combined within a third, hybrid engine that is the focus of this chapter. These qualities pertain to four areas: hardware limitations, success at transitioning between two musics, whether any long-term monotony is present, and whether the music is memorable.

Hardware limitations for a branching music engine and a generative music engine are opposed. In the previous chapters I stated that serious consideration is needed from the developers of games implementing branching music. This is due to the amount of raw drive space needed to store the multiple branches of pre-composed music. For modern devices with small standard disk sizes, such as iPads and iPhones, this would be an issue of even greater concern for a game implementing purely branching music. With the amount of pre-composed music needing to be loaded, this could even tax a processor. The generative engine, however, is more processor intensive and demands less hard drive space. Intensity here is based only on the comparison between a generative engine and a branching engine because, in a game context, the processor must perform many other calculations in any given moment, which are far more demanding than those of either music engine. These other calculations include those needed to accurately represent physics on objects or to render 3D models. I stated that these demands are not a significant hindrance due to the advent of more advanced technologies. These technologies include better lossless audio compression, such as FLAC, and the increase in the average size of hard drives as well as jumps in processing speed.

Transitioning between two continuous musics was the primary goal of both previously discussed engines. The transitional phase of gameplay is usually short (less than twenty seconds) and so it was only necessary to find a way in which each engine could fill these time-spaces with appropriate music that blended together two, possibly separate, musics. While manipulating different material, both engines fundamentally work in similar ways. In both, the programmer has dictated the choices the computer must follow and the computer has executed as per those instructions. The transitions in branching music are given aesthetic validity by the composer, who has designed the music to faithfully portray certain scenarios by means of notated (or similar) score. Transitions in the generative music engine GMGEn are given general constraints by the user. These constraints force the random output from the computer to form particular coherences across multiple hearings due to the principle of invariance defined in the previous chapter. In GMGEn this amounts to a general scoring rather than literal scoring. In this case, transitions in GMGEn are achieved by teaching the program correct ways to adjust particular elements of the score when triggered from within the game. While the two engines perform well during transitional states, there are differing degrees of success achieved when using these engines outside of transitional periods, where musical personalities may be static for longer durations (greater than twenty seconds).

In a branching music system the musical pixels of any individual archbranch are already musically designed to suit static game states. Therefore this system achieves aesthetically appropriate continuous music within both transitional periods and static periods of game play. Assuming the composer has competent skill at avoiding monotonous moments in their music, the branching music engine should never be found to be monotonous. The limit of monotony in a branching music system is therefore something intrinsic in the composed music and not a property of the engine itself.

A generative music system like GMGEn is not as successful at producing music for long static periods (time periods greater than a minute in duration) due to an eventual onset of monotony. Most games’ static states will generally last for longer durations than transitional states; therefore, ways in which the system can vary the musical personality must be included to avoid monotony. Musical personalities (or master-presets) in GMGEn are only designed to maintain musical interest for approximately one minute; therefore, upon extended listening to any individual personality, the reader will notice that a limit exists after which a decline in the musical interest of the personality occurs. Though the exact times may differ between master-presets, this generality is true for all presets.

In the construction of GMGEn efforts have been made to avoid the perception of monotony in the short-term. These efforts also indirectly increase the time for which a particular personality can be played. In GMGEn this technique assumed that an appropriate balance of both surprising and expected musical moments is needed to maintain the listener’s interest. This suggests that a level of predictability in the music is desirable to meet the expectations of the listener. GMGEn takes advantage of this by looping and reusing short memory fragments within a subsection of a single musical personality, and further modulates them to avoid monotony. This achieves two things simultaneously: it creates predictability by repeating short patterns of music, while maintaining musical interest by modulating these patterns into a different harmonic-cloud. In this example, invariance is found in the pattern of the microcosm and provides a stable point from which the listener can pleasantly predict the short-term future of the music. The aleatoric modulation provides the necessary musical surprise, which allows these short-term loops to avoid becoming monotonous too quickly. Revisiting these saved patterns and juxtaposing them with other saved patterns treated in the same way creates a stable surprise-prediction balance within a single musical personality. In GMGEn, eight patterns (or subpresets) are composed for each musical personality (or master-preset). Although adding more subpresets would increase the non-monotonous duration of any master-preset, the eventual onset of monotony would still be limited by the quantities and qualities of this material. The number of subpresets used for any personality will be dependent on many factors relating to the specific game state it is designed for.
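The loop-and-modulate idea described above can be illustrated with a minimal sketch. It is not GMGEn's actual implementation: representing a stored pattern as a list of indices, representing a harmonic-cloud as a list of MIDI pitches, and the names `realise` and `loop_with_modulation` are all assumptions made purely for illustration. The stored pattern stays fixed (the predictable element) while the cloud it is voiced in is chosen at random on each repeat (the surprising element).

```python
import random


def realise(pattern, cloud):
    """Map each pattern index onto a pitch of the given harmonic-cloud (wrapping)."""
    return [cloud[i % len(cloud)] for i in pattern]


def loop_with_modulation(pattern, clouds, repeats, rng):
    """Repeat one stored pattern, voicing each repeat in a randomly chosen cloud."""
    return [realise(pattern, rng.choice(clouds)) for _ in range(repeats)]


# Hypothetical data: one stored pattern and three harmonic-clouds (MIDI pitches).
PATTERN = [0, 2, 1, 3, 2, 4]
CLOUDS = [
    [60, 62, 64, 67, 69],
    [58, 61, 63, 65, 68],
    [59, 62, 64, 66, 69],
]

phrases = loop_with_modulation(PATTERN, CLOUDS, repeats=4, rng=random.Random(0))
for phrase in phrases:
    print(phrase)
```

Because the index pattern never changes and each hypothetical cloud is listed in ascending order, every repeat keeps the same melodic contour; only the pitch content shifts. A fuller sketch would hold several such patterns (the text mentions eight subpresets per personality) and juxtapose them.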

There are situations when the long-term memory faculty of the listener can be utilised by the game music composer. The composer may wish to attach musical motifs to events or situations throughout the entire game in order to emotionally affect a player in future situations, which may increase their level of immersion. Creating musical motifs that inhabit the same temporal position as certain characters, locations or emotions (among others) will to some degree make these motifs perceptibly inseparable from them. Once these motifs have been established it is possible to trigger the same emotional response in the listener again.[1] Clarke shows that ‘the identification of a characteristic motivic/harmonic procedure…are behaviours that networks…can be shown to demonstrate after suitable exposure’.[2] Clarke’s point directly applies to the network of a human brain. The listener would be remembering a previously heard music and connecting that to a previous emotional state. This type of musical prediction is a long-term version of the short-term musical prediction described above. Therefore, short-term musical memory comes from recognising the patterns of music in the present and extrapolating into a near future, while long-term musical memory is the exact memory of the microcosm of music over a large period of time, several times more than the length of a single listening. To give a typical example of this phenomenon, take a person who played through Final Fantasy VII around its release in 1997. This player may still retain much memory of the music when replaying the game many years later, regardless of having had little or no contact with it in the interim. While the reactive agility of GMGEn is positive and the design of this system fully complements the reactive situations found in almost all game scenarios, it does not create music memorable over these long-term periods. It will therefore not provide the composer with access to the emotionally affecting power of motivic attachment techniques, and the composer will ultimately be unable to use these techniques to affect the player and increase their immersion.

The motivic attachment approach (leitmotif) has a secondary benefit to the video-game music culture in that it can provide a great deal of nostalgic feeling for the player whether during a single playthrough or across multiple playthroughs. While the pursuit of memorable music in this paper is primarily to heighten the immersive experience for the gamer, some consideration of this nostalgic benefit is important to this discussion. Musical motifs often occur throughout multiple games in a particular series. As discussed in Chapter One, the Final Fantasy battle music has a very particular style associated with it and in many of the earlier games a particular bass guitar introduction can be heard. Moreover, the Hyrule theme is heard in almost every game that is part of the Legend of Zelda franchise.

The obvious cause of this long-term memorability, and of the subsequent nostalgia associated with these scores, is the vast amount of repetition found. This is due not only to the looping of scores but also to the fact that the same tracks can be heard in many different locations or in multiple scenes and scenarios in the game. Taking Final Fantasy VII as a case study: four and a half hours of scored music exist but the game may take over thirty hours to complete on a first attempt. Therefore, on average, the score will be repeated between six and seven times during this first full playthrough. Further, the listener may not hear the full four and a half hours of existing score because they choose not to visit (or simply do not discover) certain locations, or do not experience certain scenes where unique portions of the soundtrack might be played. This further contributes to the number of likely repetitions. Players drawn along by the game’s plot elements are therefore required to listen to this music repeatedly, resulting in the music becoming subconsciously embedded in the player’s memory.
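The repetition estimate above is a simple ratio of play time to the amount of score actually heard, which the following sketch makes explicit. The function name `average_repetitions` and the four-hour figure for a partially discovered score are illustrative assumptions; only the thirty-hour and four-and-a-half-hour figures come from the case study.

```python
def average_repetitions(play_hours, score_hours_heard):
    """Average number of times the heard portion of the score repeats."""
    return play_hours / score_hours_heard


# Figures from the case study: thirty hours of play, 4.5 hours of score.
full_score = average_repetitions(30, 4.5)
print(round(full_score, 2))

# Hypothetical player who only ever encounters 4 hours of the score.
partial_score = average_repetitions(30, 4.0)
print(partial_score)
```

The second call shows why missing part of the score raises the repetition count: the same play time is spread over less music.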

An engine with the ability to execute long-term memorable music will give the video-game music composer access to the immersive influence that motivic attachment techniques allow. A branching music engine’s music is memorable over long periods of play and across multiple plays because it creates an exact microcosm on each play. Conversely, as GMGEn cannot create these situations, the branching music engine has the edge on this criterion. An ideal system is achievable in which the user receives the benefits of long-term memorable segments of music containing static microcosms (branching) while still receiving the reactive scoring a dynamic music engine brings (generative). The obvious step made here is to create a third engine hybridising the two previous engines to exploit the strengths of both in their best situations. Therefore, a branching system populated with pre-composed music will provide the composer with the ability to emotionally affect a player over long periods of play. A generative system producing musical personalities will provide the reactive flexibility needed by the potentially fluctuating game states. The generative system will be used to transition music while the branching system will be used to occupy static game states.

I appropriate terminology for this system from scientific dynamic system models resembling the proposed hybrid system. The term intermittent is used to describe systems that show periodic behaviour within chaotic phases. Musical intermittency can be seen as a metaphorical representation of the mathematical system rather than an exact rendering. The ostensible semantics of this term are readily understandable and appropriate for describing the general behaviour of the proposed system; therefore, the differences between the mathematical and the musical are a negligible concern. In short, musical intermittency here will describe an engine that switches between two distinct states. Part of the disparity between the mathematical and the (now) musical intermittency models is found in the creation of the intermittent behaviour. In mathematics, intermittency is observed naturally; it is not enforced upon the system by artificial means. It simply occurs, and is therefore created by the same physical laws governing this universe. In a dynamic musical system intended for video-games, the intermittent behaviour would be artificially enforced upon the music under precisely triggered conditions.

The intermittency engine will have a pre-composed branching side and a generative side constituting the engines explained in detail in Chapters One and Two. Each side of the intermittency engine will deal with one of the two different states of game play. As has been explained before these states are roughly approximated as either static or transitional. The generative side of the engine will deal with transitional states to allow reactive agility. The branching side of the engine will accompany static states to allow a long-term memorable microcosm to become established within a player.

To allow for pre-composed music to be played there must be stable periods of time (time-spaces) where the game state cannot change. These time-spaces occur as part of a whole static state. In these time-spaces we would be waiting either for temporally known events to run their course or in a situation where, for a known duration of time, the player would be unable to trigger an event that could require a change in the music. During these stable time-spaces it is unnecessary to change the music and therefore the music accompanying these sections can be linearly composed. It is at these points that the composer can insert affecting motifs drawing upon the long-term memory of the listener, reminding the player of particular places, people or emotions. These stable time-spaces exist in various scales and at various locations within a typical game. Their exact properties are dependent on extremely specific situations and options available to the player and therefore are unique for every game. Different programming techniques are necessary to determine exact durations for a specific static time-space; however, from the composer’s perspective, once the exact duration of the time-space is determined they simply need to write appropriate music for this exact duration of time. In certain situations it may be beneficial for multiple versions of the music to exist but this is a further consideration beyond the scope of this paper. The separate states of this engine and the compositional properties of the music they provide can be described using a visual analogy. As the stable time-spaces are occupied by determinable music they can be seen as pre-composed islands. In this analogy, the determinable music of the islands is juxtaposed with the indeterminable music of the generative musical seas surrounding the islands.

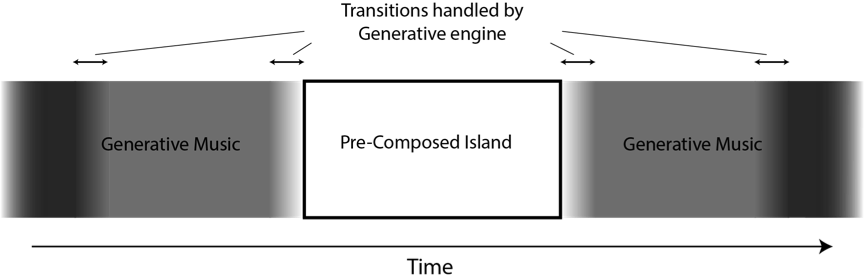

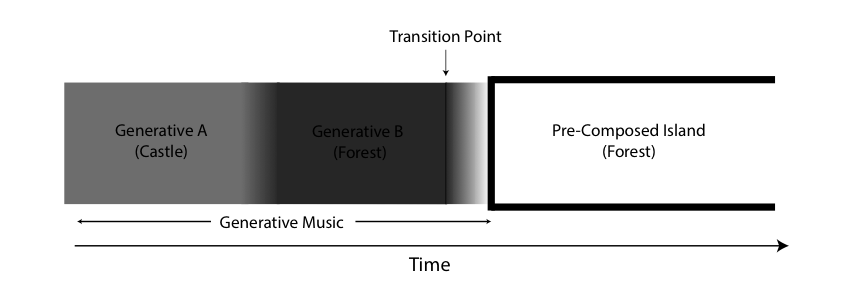

Figure 11 shows an image of a pre-composed island of music inside the chaotic (here grey) sea of the generative music with the time domain running from left to right. This image is useful for illustrating the presence of two sides to the intermittency engine as well as consolidating the analogy above. The obvious borders of the pre-composed island are ridged to represent the stability (and definability) of the time-space and the musical microcosm contained within. Transitional periods between generative musical personalities are shown as a blending of two colours to represent a period of time where qualities of both musical personalities exist simultaneously, due to the transition. Each linear end of the diagram shows the blending, or transitional period, between two musical personalities. Two different musical personalities within the generative engine are shown as two different shades of grey.

Figure 11 – Graphic score showing the implementation of Pre-Composed Islands of music within Generative Musical Seas, which handle transitional phases.

I wish to illustrate how the intermittency system might be implemented within the two scenarios upon which this paper has already demonstrated possible improvements: the traditional Final Fantasy battle system discussed in Chapter One and a more spatially (2D or 3D) explorable (open world) adventure game. In the examples below I will explore the transitions between each side of the intermittency engine. First, I discuss the transition from the pre-composed side to the generative side using the Final Fantasy battle system explained in Chapter One.[3] Second, I will discuss the opposite transition using the case study of a more modern game, Rogue Legacy.[4]

The intermittency engine would have a pronounced effect on the music of the battle system found in the earlier games in the Final Fantasy series. Chapter One analysed the typical structure in this scenario and found that the greatest aesthetic discontinuity was found during transitions at the end of a battle where the battle music is cut short and a new victory fanfare begins; this was illustrated in the Demonstrations_Application.[5] My aim was to connect these sections of music or design a reactive ending to the battle music.[6] The branching engine addressed the issue with the disjointed transitional music heard in this situation. Using an intermittency engine the transition between overworld and battle would still be handled by pre-composed music requiring no designed control over the transition. I argued that this particular transition was aesthetically connected to the narrative potential of battle and so does not require change. The main body of music would also be handled by pre-composed sections of score thus providing a memorable musical microcosm for the player. In the intermittency engine, the final transition from the battle music to victory music would be handled by a change in musical personality that the generative side of the engine would produce. This is because these sections of gameplay only last for a short duration. It is prudent to consider appropriate game triggers where the engine can be switched from the pre-composed side to the generative side to handle the transition.

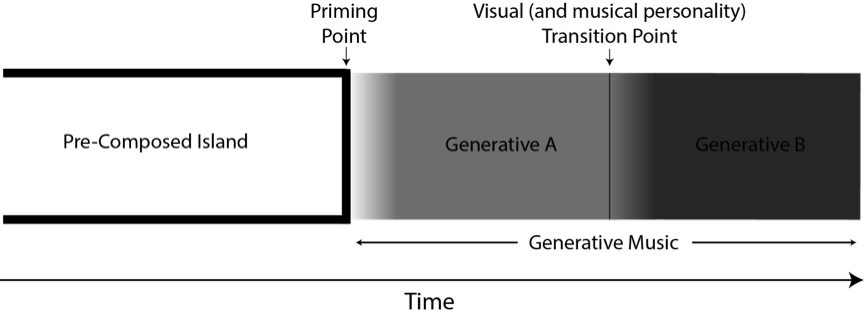

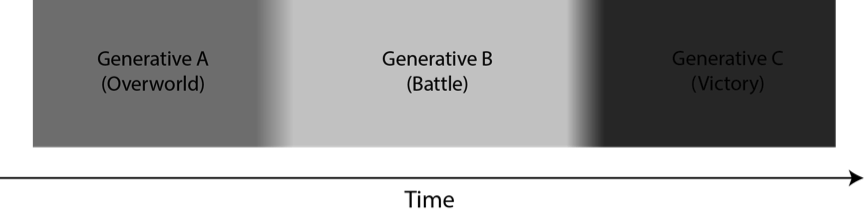

A switch between pre-composed music and generative music must be made prior to the point at which the visual (and musical personality) transition would take place. Two triggers are therefore required to make a full musical transition: first, the priming point, where the system switches to the generative side; and second, the visual transitioning point, where the engine begins to make the musical changes between two personalities (see Figure 12). Figure 12 shows the priming point at which the generative engine takes over and starts providing generative material similar to the pre-composed music (Generative A), and the point at which the generative music engine shifts personalities (into Generative B), synchronising with the visuals at the visual transition point. The Final Fantasy battle system offers many game states that could act as priming points. One suggestion is for a priming point to be based on an enemy-health threshold. Once the enemy’s health is below a certain value, for example ten percent, a trigger message would be sent and the engine would transition from the pre-composed side to the generative side. Another threshold trigger for a priming point might be taken by roughly estimating how quickly the characters could end the battle based on their current strength compared with the enemy. There may be several methods for obtaining this data, which the game designers would have to decide upon during development. The latter achieves this goal to a greater extent across the entire traditional game setting because, during the later stages of the game, the player’s characters will become much stronger than the majority of enemies they will face, meaning that the characters can often execute a one-hit knock out (KO). Further, in this case, with the priming point being likely to occur straight after the opening introduction music, this would mean that for one-hit KO battles, or generally shorter fights, the intermittent music engine might skip straight to the generative side after the pre-composed introduction. In consideration of this, the analyst can expect the intermittency engine to write music with two emergent structures. In the event the battle takes a ‘long’ time (defined here as the system having enough game time to reach pre-composed music in Section B, described in Chapter One) the emergent structure would resemble that shown in Figure 11. In short battles where the system is unable to reach the pre-composed island the structure would resemble that shown in Figure 13. In this case it is preferable to employ several different priming points designed to create the largest duration possible of pre-composed music under the specific conditions of the battle. The intermittency engine also has utility outside the specific battle situation and can be used to score narrative portions of the game. The fact that this engine tackles the root elements of all gameplay enables it to be used in many other game states and in other game genres.
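The two-trigger scheme described above (priming point, then visual transition point) can be sketched as a small state machine. This is a minimal illustration under stated assumptions, not a description of any existing game's code: the names `Side`, `BattleState`, `is_primed` and `next_side`, the `party_damage_per_turn` estimate, and the default thresholds are all hypothetical. The ten percent health threshold is the example value given in the text.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Side(Enum):
    PRE_COMPOSED = auto()  # pre-composed island
    GENERATIVE_A = auto()  # generative material resembling the island
    GENERATIVE_B = auto()  # target personality, e.g. victory music


@dataclass
class BattleState:
    enemy_health: float
    enemy_max_health: float
    party_damage_per_turn: float  # hypothetical estimate supplied by the game
    battle_won: bool = False      # stands in for the visual transition point


def is_primed(state, health_fraction=0.10, max_turns=1.0):
    """Priming point: low enemy health, or a battle estimated to end very soon."""
    if state.enemy_health / state.enemy_max_health < health_fraction:
        return True
    if state.party_damage_per_turn > 0:
        return state.enemy_health / state.party_damage_per_turn <= max_turns
    return False


def next_side(current, state):
    """Decide which side of the engine should be sounding for this game state."""
    if state.battle_won:
        return Side.GENERATIVE_B
    if current is Side.PRE_COMPOSED and is_primed(state):
        return Side.GENERATIVE_A
    return current


# A one-hit KO battle primes immediately after the introduction.
one_hit_ko = BattleState(enemy_health=1000, enemy_max_health=1000,
                         party_damage_per_turn=5000)
print(next_side(Side.PRE_COMPOSED, one_hit_ko))
```

In this sketch the strength-based estimate primes the engine before any damage is dealt in a one-hit KO battle, which corresponds to the short emergent structure in which the pre-composed island is never reached.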

Figure 12 – Priming Point and Visual Transition Point locations

Figure 13 – Structure of music in game states unable to reach pre-composed islands due to highly transient natures (suggested personalities for Final Fantasy shown in parentheses).

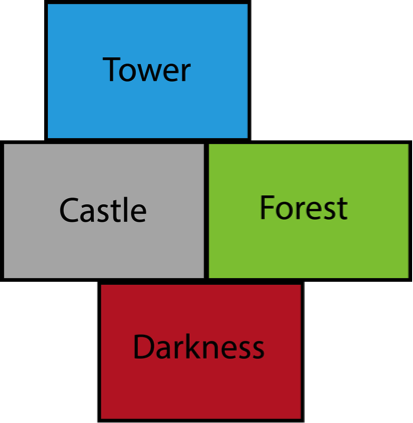

Consider a 2D side-on platforming adventure game such as Rogue Legacy.[7] The generation of levels in Rogue Legacy is achieved in a macroscopic way. In this game the user will traverse a land divided into four separate areas known as the Castle, Forest, Darkness and Tower (see Figure 14). The larger areas are always in the same position relative to one another, and individual rooms within the larger areas are generated differently each time. An analogy can be drawn between the method with which level generation occurs in Rogue Legacy and the way in which musical personality generation occurs in GMGEn. The player starts in the castle and will find the tower upwards, the forest rightwards and the darkness downwards. Implementing an intermittency engine in this scenario would link each of these areas with its own musical personality, which has a predictable macrocosm and an unpredictable microcosm, mimicking this world’s geography. These personalities would create the foundation for the transitions between zones. Although less obvious than within the Final Fantasy battle system above, there are still adequate moments in which a switch can occur from the generative side of the engine to the pre-composed side. Figure 15 illustrates the significant points necessary for transitioning in this direction, from the generative engine to the branching engine.

Figure 14 – Rogue Legacy’s macroscopic world generation area positioning

Figure 15 – Transitioning into pre-composed music within the Intermittency Engine

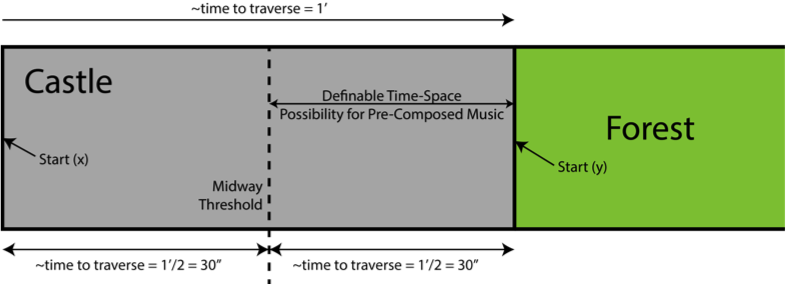

For the transition from the generative side to the pre-composed side of the intermittent engine to occur we must find an appropriate transition trigger within the game. In Rogue Legacy, the player takes time to traverse from one area to another. Giving a rough estimate for this specific scenario, the shortest time taken to get to the forest from the starting location (leftmost in the castle; see Figure 16) is approximately one minute. Let’s assume that, even given an optimum route generation built for getting to the forest as quickly as possible, a user cannot get there in less than one minute. This means that there is a definable duration where the player can only be in the castle zone and under no circumstances is it possible for the player to be anywhere else that would require different music. This is a simplified test scenario and does not take into account other features of this particular game; however, it is still true that if an assumption of this nature can be made consistently and accurately within any game then the proposed duration can be filled with pre-composed music. Improving the accuracy of an assumption requires a complete knowledge of all possible game state transitions but can often be simplified in a number of cases. The previous assumption about the definable duration within the castle would not be true if, for example, the user actually starts in the forest and transitions into the castle zone (start y in Figure 16). We would expect to hear castle music but we can no longer assume that it will take the user one minute to return to the forest, as the player is much closer to the forest now than they were at the original starting location; therefore, we cannot simply start a one-minute-long piece of pre-composed music because the player may choose to turn around and re-enter the forest having only spent a very short period of time in the castle. This would result in any pre-composed ‘castle’ music now being unintentionally played in the forest. In this case the trigger for the switch to the pre-composed side of the engine must come at a different point: a point where the player is as far from any game-state transition as is possible.

Figure 16 illustrates a more advanced trigger able to select an appropriate point to switch the intermittency engine to the pre-composed side during a definable time-space. As our starting position we here take either the original starting location (start x in Figure 16) or the entrance to the forest (start y in Figure 16). Adding a midway threshold as a trigger allows us to calculate a definable time-space from the moment the player crosses the threshold. Upon crossing this midway threshold in either direction the player now must travel for at least thirty seconds to reach the forest regardless of which location they started at. Although this creates a smaller time-space for pre-composed music than in the first example, it is the only time-space which is definable when multiple starting locations (start y or start x) are possible. These examples also show that pre-composed music filling this space needs to be short enough for the eventuality that the player moves directly towards a game-state transition point and will therefore take the shortest amount of time possible. Again, much focus should be given to creating ample numbers of transition triggers (towards the pre-composed side) that enable an intermittency engine to produce the largest amount of pre-composed music possible to enable long-term memory of the music across playthroughs. Employing branching music techniques within pre-composed islands would create opportunities for larger islands and would provide more compositional interest to these sections.
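The midway-threshold trigger described above can be sketched in a simplified one-dimensional model of the castle. Everything concrete here is an assumption for illustration: positions are measured in arbitrary units along a single axis, the player's maximum speed is one unit per second so that the forest entrance lies sixty seconds from start x, the list of cue lengths is invented, and the function names are hypothetical. The guaranteed time-space is taken to be the shortest possible travel time from the player's current position to the nearest game-state transition.

```python
def time_to_nearest_exit(position, exits, max_speed):
    """Shortest time (seconds) in which the player could reach any exit."""
    return min(abs(exit_pos - position) for exit_pos in exits) / max_speed


def crossed(previous, current, threshold):
    """True when the player has moved across the threshold in either direction."""
    return (previous < threshold) != (current < threshold)


def longest_fitting_cue(cue_lengths, window):
    """Longest pre-composed cue (seconds) that fits inside the guaranteed window."""
    fitting = [length for length in cue_lengths if length <= window]
    return max(fitting) if fitting else None


# Hypothetical layout: start x at 0, forest entrance (start y) at 60.
FOREST_ENTRANCE = 60.0
MIDWAY = 30.0
MAX_SPEED = 1.0                    # units per second (assumed)
CUES = [12.0, 20.0, 28.0, 45.0]    # invented cue lengths in seconds


def on_player_moved(previous, current):
    """Return the cue length to start, or None if no island should begin."""
    if not crossed(previous, current, MIDWAY):
        return None
    window = time_to_nearest_exit(current, [FOREST_ENTRANCE], MAX_SPEED)
    return longest_fitting_cue(CUES, window)


print(on_player_moved(29.0, 31.0))  # crossing towards the forest
print(on_player_moved(31.0, 29.0))  # crossing away from the forest
```

The window is measured from the player's position just after crossing rather than from the threshold itself, which keeps the estimate conservative when the player is heading straight for the forest. A real game would need to include every possible game-state transition in the list of exits, not only the forest entrance.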

Figure 16 – An appropriate in-game trigger to switch the intermittency engine.

Hardware demands remain trivial when implementing an intermittency music system for most conventional gaming technology. Hybridising the branching and generative engines averages their hardware demands. An intermittency engine would exploit the use of many different pre-composed sound files, like a branching music engine, but would have vastly smaller pools of those sounds. Therefore it would not create the same level of demand for drive space as a branching engine but would require more than a generative engine. Memory demands are greatest in the branching engine, lower in the intermittency engine and lowest in the generative engine. When compared with other simultaneous demands in the video game context, processing demands are not high for either a branching system or a generative system; therefore, under a worst-case scenario an intermittent system could not exceed whichever were found to be the highest. The prime bottleneck occurring in all three of these different engine scenarios is the reading of the sound files from a hard disk. This occurs because of the physical time it takes the read-arm of a hard disk to read the information on the disk.

A hard disk works in a similar way to a vinyl record except that instead of an extremely fine spiral over the record’s surface there are concentric circles; to read a block of memory the read-arm must move from one concentric circle to another. This takes approximately ten milliseconds per move and can therefore add up if the memory blocks needing to be read are large or separated on the hard disk. At the time of writing the solid-state drive (SSD) is becoming a commercial standard in new computers. An SSD is able to read any block of memory without having to physically move a read-arm to different locations on a disk. This effectively minimises the time taken to access information. As SSDs become a standard in gaming technology the issue of memory read bottlenecks disappears. To summarise: current technology can trivially handle the loads of the most taxing musical engine discussed in this paper, the branching engine. The technology of the near future will therefore make any hardware load issues of a less taxing engine, such as an intermittency engine, negligible.
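To give a sense of scale for the read-arm bottleneck described above, the following sketch multiplies the approximate ten-millisecond move time by the number of separate regions a sound file occupies on the disk. It is a deliberately rough model: the function name and the fragment counts are hypothetical, and rotational latency and data transfer time are ignored, so the result is only the delay attributable to arm movement.

```python
SEEK_MS = 10  # approximate cost of one read-arm move, as stated in the text


def arm_movement_delay_ms(fragments, seek_ms=SEEK_MS):
    """Delay from read-arm movement alone for a file split into `fragments` regions."""
    return fragments * seek_ms


# Hypothetical cases: a contiguous file versus one scattered over 25 regions.
contiguous = arm_movement_delay_ms(1)
scattered = arm_movement_delay_ms(25)
print(contiguous, scattered)
```

Under these assumptions a badly scattered file costs a quarter of a second in arm movement before any audio is delivered, which is long enough to matter for a transition that must land on a precise trigger. In the same model an SSD corresponds to a move cost of zero.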

Before rigorous testing of an engine working in many different active scenarios is done it is difficult to forecast negatives onto the proposed intermittency engine. This game engine is complementary to the game scenarios proposed in this paper but will also work in a variety of others. An arguable, yet subjective, weakness can be found in those scenarios where game-states change extremely quickly. These scenarios would give the intermittency system less chance to settle into pre-composed music. In this case the intermittency engine would produce music from the generative side and would not provide the full aesthetic experience this engine is designed for (see Figure 13).

This paper has thus far only considered an intermittency engine where the pre-composed music is of a low musical resolution (discussed in Chapter One). If this resolution were raised, the intermittency engine could also suit the needs of games with particularly frequent game-state transitions. Moreover, it is in these types of scenarios that I believe the intermittency engine is likely to produce the greatest degree of success at creating memorable, aesthetic, continuous music, to provide greater immersion for the player, within this dynamic medium in the future.

[1] Clarke, pp. 30-31.

[2] Clarke, pp. 30-31.

[3] Final Fantasy VII, PlayStation Game, Squaresoft, Japan, 1997.

[4] Rogue Legacy, PC/PlayStation 3 Game, Cellar Door Games, 2013.

[5] Tab 1 and Tab 2 of the Demonstrations_Application.

[6] Tab 2 in the Demonstrations_Application.

[7] Rogue Legacy, PC/PlayStation 3 Game, Cellar Door Games, 2013.