This chapter explores the possibility for a generative music engine to make many of the choices needed for a reactive dynamic score for video game implementation. It also shows how this can be achieved while still having a great deal of control over the resulting output of the program and ultimately the power to create aesthetically appropriate scores for particular states within the game. I first briefly discuss the issue of authorship and what these considerations might mean for the concept of creativity. I discuss what might constitute a musical artificial intelligence and justify the use of this term within this project. The majority of this chapter discusses the founding of basic musical material and how to model these within a generative system. Discussion is grouped into subheadings with main issues including: rhythm, melodic contour and harmony. Finally I describe the Game Music Generation Engine (GMGEn) and the way in which it creates musical ‘personalities’ to implement a state-based musical composing engine within a video game.

As David Cope states, ‘computers offer opportunities for style imitation’ and ‘have been successfully used in automated music (Hiller and Isaacson 1959), algorithmic composition (Fry 1984), and as stochastic probability generators (Xenakis 1972), to name a few’.[1] Generating computer-composed music shares many similarities with generating human-composed music, therefore many of the considerations of traditional composing can be applied and included in the program. The system will be designed to process random inputs to create musical composition.

Cope, the creator of a musical system, named Experiments in Musical Intelligence (EMI, a.k.a Emmy), which takes a corpus of music in a particular style and generates new music in that style, has discussed the issue of authorship. In an interview, when asked whether Cope listens to Emmy’s music as he would the music of human composers he states that ‘Emmy’s music is ‘human’ as well’ and that his computers simply follow his instructions.[2] He further directly states that he claims authorship over Experiments in Musical Intelligence’s works.[3] Through my work with the Game Music Generation Engine (GMGEn) I do not claim direct authorship over the scores created, but do claim authorship over GMGEn itself and by indirect means, the scores it produces. A human’s involvement in the sonic output of this computer-composed music is therefore indirect but remains present. The reader is directed towards Arne Eigenfeldt’s article for a concise explanation of the history of interactive music.[4]

It may seem that we have reached a twilight of human-composed music if a program is able to appropriately accompany on-screen action without the input of a human. Andy Farnell writes that computer programs and algorithms such as ‘expert systems, neural networks and Markov machines can codify the knowledge of a real composer but they cannot exhibit what makes a real composer, creativity.’[5] Farnell is illustrating that the computer is unable to break free of its digital make-up in this sense. Although expert systems, neural networks and Markov machines are artistically useful when within musical composition, they do not direct their output towards human goals as human intelligence does. This is why, when applying small changes to their systems, it is no longer possible to guarantee that humanly expected musical congruence occurs from the output. Therefore, as Axel Berndt claims, ‘the human artist… is still indispensable!’[6] Fundamentally Farnell and Berndt are correct; a computer will never do anything definitively creative in its composition of music, but will (currently) only ever follow its programming rigidly and accurately; however, I contend that absence of (musical) creativity can only ever be measured in the terms and limits of the musical observer. As the observer is human, then human limits of perceptibility must be taken into account.

For the interactive situation of a video game, if a generative music program can improvise music to a level at which a human listener believes it to be an appropriate aesthetic accompaniment to the on-screen action, it may be perceived as a musical artificial intelligence. In considering the composition of interactive music, Winkler states simply that ‘computers are not intelligent. They derive their appearance of intelligence only from the knowledge and experience of the person who creates the software they run.’[7] I take from Winkler the suggestion that a person skilled in computer programming as well as in the art of musical composition is best placed to create a program capable of convincing a human listener that the dynamic computer-generated music is linear music composed by a human. Further, W. Jay Dowling agrees that ‘it should be possible to integrate appropriate artificial intelligence techniques to construct an expert system which improvises.’[8] Jesper Kaae states that for a listener, ‘music will always be linear, since music exists in a length of time’, and so the challenge becomes only to create a convincing music during this time.[9] These observations are useful when building a generative music engine. George Lewis’s interactive work Voyager (1993) has already demonstrated the strength of ‘music-generating programs and music-generating people’ working towards the same goal.[10]

When used in the dynamic context of video games, generative music holds a true key towards combining musical audio with onscreen action and aiding immersion. Tab 2 in the Demonstrations_Application demonstrated that the modern computer’s capabilities extend beyond what was necessary to make a working piece of reactive dynamic music. Winkler suggests that traditional models of music making are a useful starting point for interactive relationships however, ‘since the virtue of the computer is that it can do things human performers cannot do, it is essential to break free from the limitations of traditional models and develop new forms that take advantage of the computer’s capabilities.’[11] Given this excess of computer processing power in a branching music system using pre-composed music, it is a natural further extension to allow the computer control over greater musical detail than simply musical structure like that in Tab 2. We can use this to adjust elements of music so as to create an aesthetically appropriate generated music for use in the video game context. Below I will discuss some of the necessary considerations that must be taken by the composer-programmer within this medium.

An approach to musical composition can occur as either top down, where the composer may design large structures before working on detail, or bottom up, where small musical segments may be built into larger structures, or a synthesis of the two approaches is possible. Programming requires a similar approach to understanding goals on the macro scale and achieving them with methods on the micro scale. During the process of programming and composing, some new features can be found to be necessary, or beneficial, while working at the micro scale that were not foreseen when designing at the macro level. When discussing Lutoslawski’s use of aleatoric methods, Charles Bodman Rae defines these as a form of constrained randomness, ‘a framework of limitation, a restricted range of possibilities’ where ‘the full range of possible outcomes is foreseen.’[12] Aleatoric methods also lend themselves well to computer programming and generating music and are therefore used heavily in the construction of GMGEn and its output.

In defining a macro goal for this project, the aim is to create a generative musical engine, an expert system, which can produce continuous music in a human-controlled style. It will have the ability to combine fundamental elements of music into single musical lines. Six of these lines will be combined in a number of ways to create a rich variety of time-independent musical spaces. When complementing spaces follow one another sequentially, a consistent musical style is created which has internal variety and external reactive potential. When non-complementing spaces follow one another, a style transition takes place, which can be used in the video game to accompany game state changes of more varied degrees and so enhance the player’s immersion.

The macro-scale goal of this paper is achieved by completing three stages of work on the micro scale. These stages represent the reducing of a problem ‘to a set of subproblems’.[13] Simplification creates the opportunity to address issues individually, alongside the musical considerations the composer must make. In the first stage of work, I will lay out basic attributes, or typicalities, of separated musical elements that will act as a sounding board from which to elaborate when constructing a musical artificial intelligence. Second, I will discuss how these attributes can be modelled in a MaxMSP prototyping environment. Third, I will build a working generative music engine, GMGEn, which will combine all the components discussed and feature a usable user interface. It will exist as a proof of concept and a standalone work of generative art within my final portfolio. Although some scientific terminology, explanation and methods are used, the success of the project will be judged in a qualitative fashion, as is appropriate for the project in artistic terms, and upon criteria intrinsically tied to my own artistic inclinations and experience in composition.

Typifying rhythm is probably one of the most difficult aspects of this project. Grosvenor Cooper and Leonard B. Meyer completed a comprehensive study on rhythm, which typifies it from the simple to the complex.[14] Cooper and Meyer organise their definitions into architectonic levels so as to define different sub- and super-groups of rhythmic construction.[15] Their analysis of rhythmic architectonics complements a bottom-up approach to the programming of this rhythmic engine. In scale order, from the smallest to the largest, this section will discuss the attributes of pulse, duration, micro-rhythm, meter and macro-rhythm in GMGEn.

Pulse is the lowest architectonic level of rhythm in GMGEn and constitutes an unbroken regular unit of time that is non-distinct within a single section. It is a unit of time from which all other durational values are derived and is created by setting a metronomic impulse (in milliseconds) and broadcasting it throughout the program to any location requiring synchronisation to, or information from, the pulse.

Duration is a unit of time applied directly to a sound and is distinct from pulse in that it can hold many different values within the same section. In GMGEn, duration for a single sound is obtained by taking the value of the pulse (in milliseconds) and dividing or multiplying it by any factor required, then returning this value to the sound as its duration parameter. The sound will then play for the number of milliseconds held in its duration parameter.
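The relationship between pulse and duration can be illustrated with a minimal Python sketch. GMGEn itself is built in MaxMSP, so this is not its implementation; the function name `duration_from_pulse` and the 500 ms pulse are assumptions chosen purely for illustration.

```python
def duration_from_pulse(pulse_ms: float, factor: float) -> float:
    """Return a duration in milliseconds as a multiple or fraction of the pulse."""
    if pulse_ms <= 0 or factor <= 0:
        raise ValueError("pulse and factor must be positive")
    return pulse_ms * factor


PULSE_MS = 500.0  # hypothetical pulse: one impulse every 500 ms

half_pulse = duration_from_pulse(PULSE_MS, 0.5)  # the pulse divided by two
double_pulse = duration_from_pulse(PULSE_MS, 2)  # the pulse multiplied by two
```

Because every duration is derived from the single broadcast pulse, changing the pulse value rescales all durations in a section at once.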

Micro-Rhythm is the combination of two or more durations played in sequence by one line of music. In essence this is the level of the rhythmic detail of the piece. This is achieved in GMGEn by combining new sounds with new durations. Durational values are taken from memory or generated on-the-fly depending on the type of rhythmic output the user has selected.

Meter is ‘the measurement of the number of pulses between more or less regularly recurring accents. Therefore, in order for meter to exist, some of the pulses in a series must be accented—marked for consciousness—relative to others.’[16] In GMGEn, metric groupings are achieved part by chance and part by constraint, an aleatoric method. First, a total group duration, or bar, will be determined and will be found by multiplying the value (in milliseconds) of the pulse by the number of beats in the bar (chosen by the user). Once a total bar length is obtained the program will generate a random duration from available options. This duration will then be subtracted from the total duration and another random duration will be generated and also subtracted from the total duration. This happens recursively until the total bar duration is filled.
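The bar-filling procedure described above might be sketched as follows. This is an illustrative Python approximation rather than the MaxMSP patch: the name `fill_bar`, the set of duration factors, and the rule that only durations which still fit are offered as options are all assumptions, and a loop stands in for the recursion.

```python
import random


def fill_bar(pulse_ms, beats_per_bar, factors, rng):
    """Fill one bar with randomly chosen durations (all in milliseconds)."""
    remaining = pulse_ms * beats_per_bar  # total bar duration
    bar = []
    while remaining > 0:
        # Constraint: only offer durations that still fit in the bar.
        options = [pulse_ms * f for f in factors if pulse_ms * f <= remaining]
        if not options:
            # Assumption: a leftover shorter than every option becomes
            # one final duration so that the bar is filled exactly.
            bar.append(remaining)
            break
        duration = rng.choice(options)  # chance: pick any fitting option
        bar.append(duration)
        remaining -= duration
    return bar


rng = random.Random(0)  # seeded so that the sketch is repeatable
bar = fill_bar(pulse_ms=500, beats_per_bar=4, factors=(0.5, 1, 2), rng=rng)
```

Whatever sequence the random generator produces, the durations in `bar` always sum to the full bar length, which is the sense in which the method is constrained rather than free.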

Macro-Rhythm is a concept applying to the rhythmic structure across larger architectural boundaries or phrases. In GMGEn a phrase constitutes a number of grouped bars. Larger super-phrases are possible by grouping a number of phrases together and theoretical hyper-phrases might be groups of super-phrases and so on. In GMGEn, a single phrase is obtained by allowing the program to store many filled bars of durational values, which it has generated, and recalling these values in future bars. Allowing the recall sequence to be similar to, or the same as, that previously generated produces a phrase. This can also be done in a more immediate way by making the program regenerate a whole phrase’s worth of durational values at a point during playback. Adding the ability for the program to change either meter or durational values during playback creates a further variety of phrases. If particular patterns were applied to either regenerations or changes of meter (or both), this could structure the music into typical Western classical forms such as sonata or rondo. The reader is directed to the works in the InteractivePortfolio_Application accompanying this paper, which demonstrate the concept of this generated music within structures found in the Western classical tradition.[17] GMGEn uses all of these techniques to produce every level of rhythmic architectonics.
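The idea of storing generated bars and recalling them in a pattern can be sketched in Python as below. The names `generate_bar` and `build_phrase`, the use of pulse multiples rather than milliseconds, and the particular recall orders are illustrative assumptions and not taken from GMGEn.

```python
import random


def generate_bar(rng, beats=4, options=(0.5, 1.0, 2.0)):
    """Fill one bar, measured in pulses, with randomly chosen durations."""
    bar, remaining = [], float(beats)
    while remaining > 0:
        duration = rng.choice([o for o in options if o <= remaining])
        bar.append(duration)
        remaining -= duration
    return bar


def build_phrase(memory, recall_order):
    """Recall stored bars in the given order to form a phrase."""
    return [memory[i] for i in recall_order]


rng = random.Random(1)
memory = [generate_bar(rng) for _ in range(3)]  # stored bars A, B and C
phrase = build_phrase(memory, [0, 1, 0, 1])     # recall pattern A B A B
rondo_like = build_phrase(memory, [0, 1, 0, 2, 0])  # recall pattern A B A C A
```

Regenerating `memory` while keeping the recall order gives new rhythmic detail inside the same large-scale pattern.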

As with rhythm, typicalities of melodic contour must be considered before attempting to program a melodic generator. In GMGEn the goal of a typical melody is to move between pitches with a majority of stepwise movement and a minority of non-stepwise, or leaping, movement. Here a stepwise movement is defined as a movement to a pitch that is one half-tone, or one whole-tone, away from the previous pitch. A leap is defined as a movement to a pitch that is further than one whole-tone away from the previous pitch. We can use random number generation to determine an individual pitch to be played by the program. This is achieved in GMGEn by linking particular pitches to the number output from a random number generator (hereafter RNG). For instance, six possible numbers can be assigned to the following pitch values: 1 = C, 2 = D, 3 = E, 4 = F, 5 = G, 6 = A. While we can assign neighbouring numbers a pitch interval that equals a step, we cannot guarantee that the RNG will generate neighbouring numbers in sequence.[18] Therefore simple random number generation does not model these goals.
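A short Python sketch makes the limitation concrete. The number-to-pitch mapping follows the example above; the function names and the leap-counting helper are assumptions added for illustration.

```python
import random

PITCHES = {1: "C", 2: "D", 3: "E", 4: "F", 5: "G", 6: "A"}


def random_melody(length, rng):
    """Choose every note independently from the numbers 1 to 6."""
    return [rng.randint(1, 6) for _ in range(length)]


def count_leaps(melody):
    """Count consecutive notes more than one scale position apart."""
    return sum(1 for a, b in zip(melody, melody[1:]) if abs(a - b) > 1)


rng = random.Random(0)
melody = random_melody(200, rng)
note_names = [PITCHES[n] for n in melody]
leaps = count_leaps(melody)
```

Of the 36 equally likely pairs of consecutive numbers only 16 are a repeat or a step, so on average more than half of the movements in such a melody are leaps.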

From this we can determine that a higher-level function is needed for a mostly-stepwise melody to be produced in GMGEn. This function controls the difference between two consecutive number outputs, in this case constraining them to closer degrees. Using the same range as above (1 to 6) and adding a second-stage hierarchical RNG with the range of -1 to 1 will achieve this. This will be called the step meter. If the RNG outputs a value of 3 and we take all possible values of the step meter (-1, 0 or +1), the next output can only be 2, 3 or 4 respectively. A two-stage hierarchical number generator with these parameters will produce a melody that never leaps: each note either repeats the previous pitch or moves by step.[19] This behaviour is known as Brownian motion: the behaviour of a particle of air as it moves through space and interacts with other particles. The motion of a single particle is macro-linear with small micro-deviations.
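The two-stage generator can be sketched in Python as follows. The clamping of the result to the range 1 to 6 at the edges is an assumption, since the behaviour at the boundaries is not specified here; the function name is also hypothetical.

```python
import random


def stepwise_melody(length, rng, low=1, high=6):
    """First-stage RNG picks a start; the step meter then moves by -1, 0 or +1."""
    current = rng.randint(low, high)
    melody = [current]
    for _ in range(length - 1):
        step = rng.randint(-1, 1)  # the step meter
        # Assumption: hold the melody at the edge rather than leave the range.
        current = min(high, max(low, current + step))
        melody.append(current)
    return melody


rng = random.Random(0)
melody = stepwise_melody(32, rng)
```

Every pair of consecutive values in `melody` differs by at most one, so no leap can occur regardless of the random sequence.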

Hybridising both of the above types of generation in the correct proportions will produce the desired melodic contour. There are two methods for producing the hybridisation to suit these goals. The first method is to allow the computer control over leap-like movements. This function would vary the step range (the second-stage RNG) and thus produce phases where leaping is possible (by using a step value greater than 1) and phases where only stepwise movement is possible (by using a step value of 1). Correct tuning of the variables (the ‘on’ time of each phase and the step magnitude) will create the intended type of melodic contour demanded by any current game state.[20] The second method would be to create presets for leaps: for example, a pre-defined leap value that is triggered at certain points (e.g. a leap of a fifth triggered every six seconds).[21]

The first, although more hierarchically complicated, is not difficult to achieve and gives more control to the computer in the design of the melody. The second not only restricts the program’s choices of leap but also predefines the leap value (this could be randomised too) meaning that increased control is given to the composer-programmer as to how the melody will develop. With each of these examples the human creator is exerting different types of control on the pitches outputted (and therefore music generated) by the program.
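Both hybrid methods can be approximated in a few lines of Python. This is a sketch under stated assumptions: phases and triggers are counted in notes rather than seconds, a leap of four scale positions stands in for the fifth, the second method uses a wider range (1 to 12) so that the preset leap always fits, and all names and default values are hypothetical.

```python
import random


def computer_hybrid(length, rng, phase_length=8, leap_range=3, low=1, high=6):
    """Method 1: alternate stepwise phases with phases of a wider step range."""
    current = rng.randint(low, high)
    melody = [current]
    for i in range(1, length):
        leaping_phase = (i // phase_length) % 2 == 1
        step_range = leap_range if leaping_phase else 1
        step = rng.randint(-step_range, step_range)
        current = min(high, max(low, current + step))
        melody.append(current)
    return melody


def human_hybrid(length, rng, leap_every=6, leap_size=4, low=1, high=12):
    """Method 2: stepwise motion with a preset leap at fixed intervals."""
    current = rng.randint(low, high)
    melody = [current]
    for i in range(1, length):
        if i % leap_every == 0:
            # Preset leap: upwards if it fits in the range, otherwise downwards.
            if current + leap_size <= high:
                current += leap_size
            else:
                current -= leap_size
        else:
            current = min(high, max(low, current + rng.randint(-1, 1)))
        melody.append(current)
    return melody


computer_line = computer_hybrid(32, random.Random(3))
human_line = human_hybrid(30, random.Random(3))
```

In the first function the program decides the size and direction of each leap within a phase; in the second both the size and the timing of the leap are fixed in advance by the composer-programmer.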

Harmony and harmonic language are two related constructs in Western art music. The Oxford English Dictionary defines harmony as ‘the combination of simultaneously sounded musical notes to produce a pleasing effect.’[22] To set aside any issue belonging to the field of musical aesthetics or perception, I wish to remove the term ‘pleasing’ for the course of this paper. I will define harmony to be: the simultaneous sounding of more than one musical note. Any requirements of the combinations of notes exist merely to create a selective harmonic consistency. This is better described by the term harmonic language, a term often used when defining aspects of a musical work that create harmonic cohesion. Harmonic language is a higher construct that involves the collections of harmonies and how they move temporally from one pitch collection to another. The harmonic terms are analogous to their rhythmic equivalents discussed above; harmony relates to the micro and harmonic language relates to the macro.

When discussing rhythm it was appropriate to use a bottom-up approach, building larger and larger complexity from simpler functions. Here it is more appropriate to take a top-down approach. To maintain harmonic cohesion over large sections of a work the compositional technique of using pitch-class sets will be employed; pitch-class sets being the arbitrary choice made by the composer to use only a specific collection of tones to construct a whole piece or section of a piece. The harmonic language for GMGEn will be limited to four specific pitch-class sets. Implementing pitch-class sets allows the program freedom to choose any of the notes that it wishes from those provided and will produce reliable harmonic combinations based on any two (or more) of the set pitches.[23]

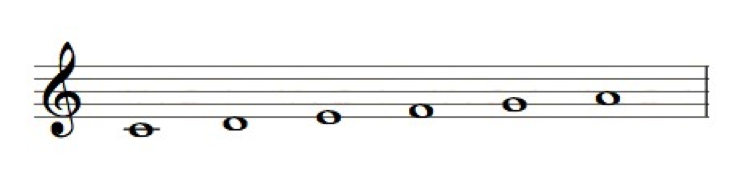

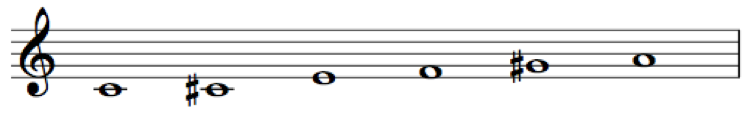

Figure 4 – Hexatonic Major Pitch Class Set

The hexatonic pitch class set of C, D, E, F, G and A (see figure 4) can be selected by the user within the patch interface. If many lines of music are all bound to this same pitch-class set then the music generated will be major-inclined. This means that the music produced will not evolve harmonically because it is unable to play any but the six notes provided.

The reader can see that any two-note combination taken from these six produces a chord that adheres to the harmonic properties contained within this pitch-class set. This is also true for any trichord.[24] It is the intention that any number of these pitches sounding simultaneously will produce chords that contribute to a consistent harmonic flavour. In essence, what will be created is an area of highly predictable, yet indeterminate, harmonic content. Winkler illustrates how the technique of constrained randomness allows a composer to set up ‘improvisational situations where the music will always be different and unpredictable yet it will always reside within a prescribed range that defines a coherent musical style.’[25] The same concept applies here and allows the creation of a coherent harmonic language.
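The constraint can be illustrated with a small Python sketch in which each musical line draws independently from the same pitch-class set. The set is the hexatonic major collection named above; the function name and the use of six lines follow the description of GMGEn but the code itself is an illustrative assumption.

```python
import random
from itertools import combinations

HEXATONIC_MAJOR = ("C", "D", "E", "F", "G", "A")


def sound_chord(pitch_set, lines, rng):
    """Each line independently chooses one pitch from the shared set."""
    return [rng.choice(pitch_set) for _ in range(lines)]


dyads = list(combinations(HEXATONIC_MAJOR, 2))      # every two-note chord
trichords = list(combinations(HEXATONIC_MAJOR, 3))  # every three-note chord

rng = random.Random(0)
chord = sound_chord(HEXATONIC_MAJOR, lines=6, rng=rng)
```

There are 15 possible dyads and 20 possible trichords in a six-note set, so the exact chord at any moment is indeterminate while its membership of the set is guaranteed.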

Though this area of harmony is predictable, it cannot be momentarily determined. This is analogous to the concept of an electron-cloud in a molecule or atom. An electron-cloud is the probabilistic 3D shape created due to the fact that no individual electron’s location can be determined accurately. An electron here is the metaphorical equivalent of a pitch in a cloud of harmonically complementing pitches; the pitch cannot be determined accurately but its limited possible forms can be. Instead of the 3D probabilistic shape given to electron-clouds, here we have a probabilistic sonic output. Given the analogous representation of electron-clouds to a cloud of harmonically complementing pitches, I am appropriating the terminology from physical chemistry to describe harmonic-clouds.

This harmonic-cloud technique is different to that of composers such as Iannis Xenakis, Krzysztof Penderecki and György Ligeti who all, Jonathan W. Bernard agrees, deal ‘directly with masses of sound, with aggregations that in one way or another de-emphasized pitch as a sound quality, or at least as a privileged sound quality.’ GMGEn does not create sound masses as complex as the work of these composers. What is discussed in the section above is a cloud of potential, and not actual, sounds. The actual sound produced, because of GMGEn’s limit of six simultaneous lines, can at a maximum be a chord consisting of six simultaneous pitches, a number that is far from being considered a ‘mass’ in the same terms as those of the composers above.

When discussing aleatoric harmonic sections in the music of Lutoslawski, Rae notices that, similar to that proposed above, ‘all such aleatory sections … share the disadvantage of being harmonically static’ and further shows how Lutoslawski avoids stasis by ‘composing some passages that move gradually from one chord to another.’[26] While Lutoslawski blends two of these masses together forming three distinct sections, in GMGEn I chain the end of one harmonic-cloud to the beginning of a second to create a harmonic evolution and avoid stasis. This, in essence, is a dynamic music chord progression: areas of highly predictable potential harmonic character chained together to create a harmonic-cloud progression. With rhythm we were able to regenerate small sections to produce phrases; with harmony we can control the chain of harmonic-clouds to produce our own harmonic evolution.
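Chaining harmonic-clouds end to end can be sketched as a lookup from elapsed time to the cloud currently sounding. In this Python illustration the class and function names, the cloud durations, and the decision to loop the progression are all assumptions; the second pitch collection is the first with its third and sixth notes flattened.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HarmonicCloud:
    name: str
    pitches: tuple
    duration_ms: int


def cloud_at(progression, time_ms):
    """Return the cloud sounding at time_ms, looping the whole progression."""
    total = sum(cloud.duration_ms for cloud in progression)
    t = time_ms % total
    for cloud in progression:
        if t < cloud.duration_ms:
            return cloud
        t -= cloud.duration_ms
    return progression[-1]  # not reached; kept as a safe fallback


progression = [
    HarmonicCloud("major", ("C", "D", "E", "F", "G", "A"), 8000),
    HarmonicCloud("minor", ("C", "D", "Eb", "F", "G", "Ab"), 4000),
]
current = cloud_at(progression, 9500)  # 9.5 seconds in: the second cloud
```

Each musical line would then draw its pitches only from `current.pitches`, so the harmony changes at the boundaries between clouds while remaining indeterminate inside each one.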

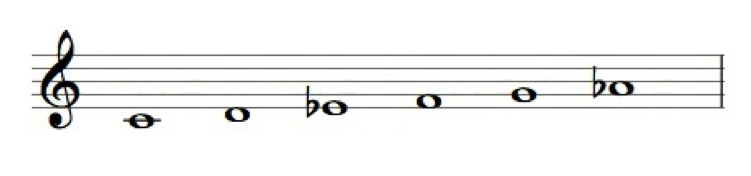

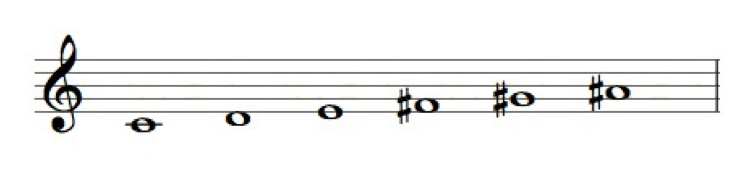

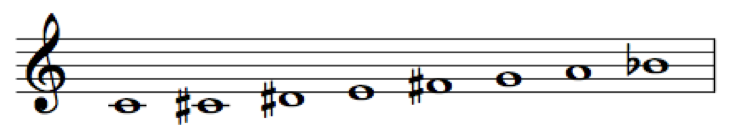

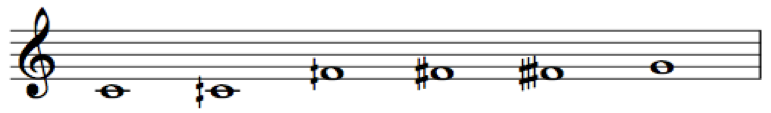

It is important to note the variables of note choice and harmonic-cloud duration in the case of harmonic-cloud control. Changing these variables will adjust the sounding output dramatically and provide an effectively infinite amount of variation. These variables can be statically assigned by the composer-programmer for the computer to automate, or a further higher level of control can be built to allow the computer control over these aspects of the music. To demonstrate this, a small selection of pitch-class sets will be used for the note choices of individual harmonic-clouds. The aim is that these pitch-class sets will have unique qualities that will give the harmonic-cloud to which they are assigned an individual quality. Within Tab 4 of the demonstration patch there are the following four pitch-class sets which are used in GMGEn: a hexatonic major set (see figure 4), a hexatonic minor set (see figure 5), a hexatonic whole-tone set (see figure 6), and an octatonic set (see figure 7). Given these four types of pitch-class set, the composer-programmer is able to switch between different sets at will or at any juncture required by the game state. The musical effect here is to be able to switch between musical modes at the trigger of a game parameter, thus allowing harmonic evolution. Switching from the hexatonic major set to the hexatonic minor set flattens the third and sixth notes of the preceding set, meaning that the overall music will change from a major to a minor quality, which could be useful for a transition from a town to a castle scene, for instance. Constituent pitches of any set could be adjusted, creating an entirely different harmonic language for any required game scenario; Tab 4 also includes two further pitch-class sets which are less bound to the traditions of Western classical music (see figure 8 and figure 9 below).
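The major-to-minor switch described above can be expressed as a small Python sketch. Pitches are written as semitones above C, the helper name `flatten_degrees` is hypothetical, and the mapping of the town and castle states to particular sets is an assumption taken from the example in the text rather than from GMGEn's presets.

```python
HEXATONIC_MAJOR = (0, 2, 4, 5, 7, 9)  # C D E F G A as semitones above C


def flatten_degrees(pitch_set, degrees):
    """Lower the given 1-based positions of a set by one semitone."""
    return tuple(
        pitch - 1 if position in degrees else pitch
        for position, pitch in enumerate(pitch_set, start=1)
    )


# Flattening the third and sixth notes gives C D Eb F G Ab.
HEXATONIC_MINOR = flatten_degrees(HEXATONIC_MAJOR, {3, 6})

# Hypothetical binding of game states to pitch-class sets.
STATE_TO_SET = {"town": HEXATONIC_MAJOR, "castle": HEXATONIC_MINOR}


def set_for_state(state):
    """Return the pitch-class set that the current game state calls for."""
    return STATE_TO_SET[state]
```

A game-state trigger then needs only to call `set_for_state` and hand the result to every musical line for the mode of the music to change.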

Figure 5 – Hexatonic Minor Pitch Class Set

Figure 6 – Hexatonic Whole Tone

The second harmonic-cloud variable, duration, is also simple to control and requires little further discussion. If a harmonic-cloud has too long a duration it will become static; if it has too short a duration it will not have time to establish a harmonic identity for the listener and will detract from the overall harmonic consistency that a harmonic-cloud is meant to provide. The lower and upper thresholds for harmonic-cloud duration will depend on the musical context and on the other elements of the music. It is likely that with some testing a reasonable formula could be produced, which could then be added to a higher level of control given to the computer. This data is not yet available.

Figure 8 – Hexatonic Collection X

Figure 9 – Hexatonic Collection Y

GMGEn

A more detailed look at what GMGEn achieves demands discussion of the aspects of music over which it has control. Partitioning a complete work of linear music into two contributing ‘cosms’ will appropriately describe these aspects. The first is the exact constituents of the sounds we hear, such as the pitch of a note or its duration; this level is the microcosm of the music. The second comes from the larger complexities that combinations of different microcosms create, and can be called the macrocosm of the music. Features of the macrocosm can include, for example, structure, harmonic language and musical behaviour, among others.

When discussing various corpora (bodies of works) to be used in his program Experiments in Musical Intelligence, Cope notices that certain patterns occur in the works of the composers he chose. He calls these patterns signatures and defines them as ‘contiguous note patterns that recur in two or more of a composer’s works, thus characterising some of the elements of musical style.’[27] GMGEn does impose exact patterns between playings but not over small-scale aspects of the music. Cope uses the term ‘signatures’ to describe patterns in the microcosm of music. For patterns in the macrocosm I use the term personality. GMGEn is a generative music engine specifically designed for creating musical personalities. The concept of a musical personality in this sense is analogous to a non-specific musical template, which uses the perception principle of invariance. Invariance is defined by Clarke as ‘the idea that within the continuous changes to which a perceiver is exposed there are also invariant properties’.[28] For example, in a twelve-bar Blues the performer knows the musical template for the structure and key of the piece; it is important that all players know what these templates are, to allow for complementing improvisatory material to be added.

GMGEn’s templates are not limited to structure as are the templates of twelve-bar Blues. GMGEn has a non-motivic approach to form similar to that of George Lewis’s Voyager, of which Lewis writes that a ‘sonic environment is presented within which musical actions occur’.[29] In GMGEn, these actions are randomly generated by the lower-level functions of programming logic. The ‘state-based’ model, Lewis confirms, is ‘particularly common in improvised music’.[30] By constraining the random values generated in the lower-level functions, GMGEn can be said to be acting in an improvisatory way. Further, composition of a state-based generative system directly complements the state-based nature of a video game.

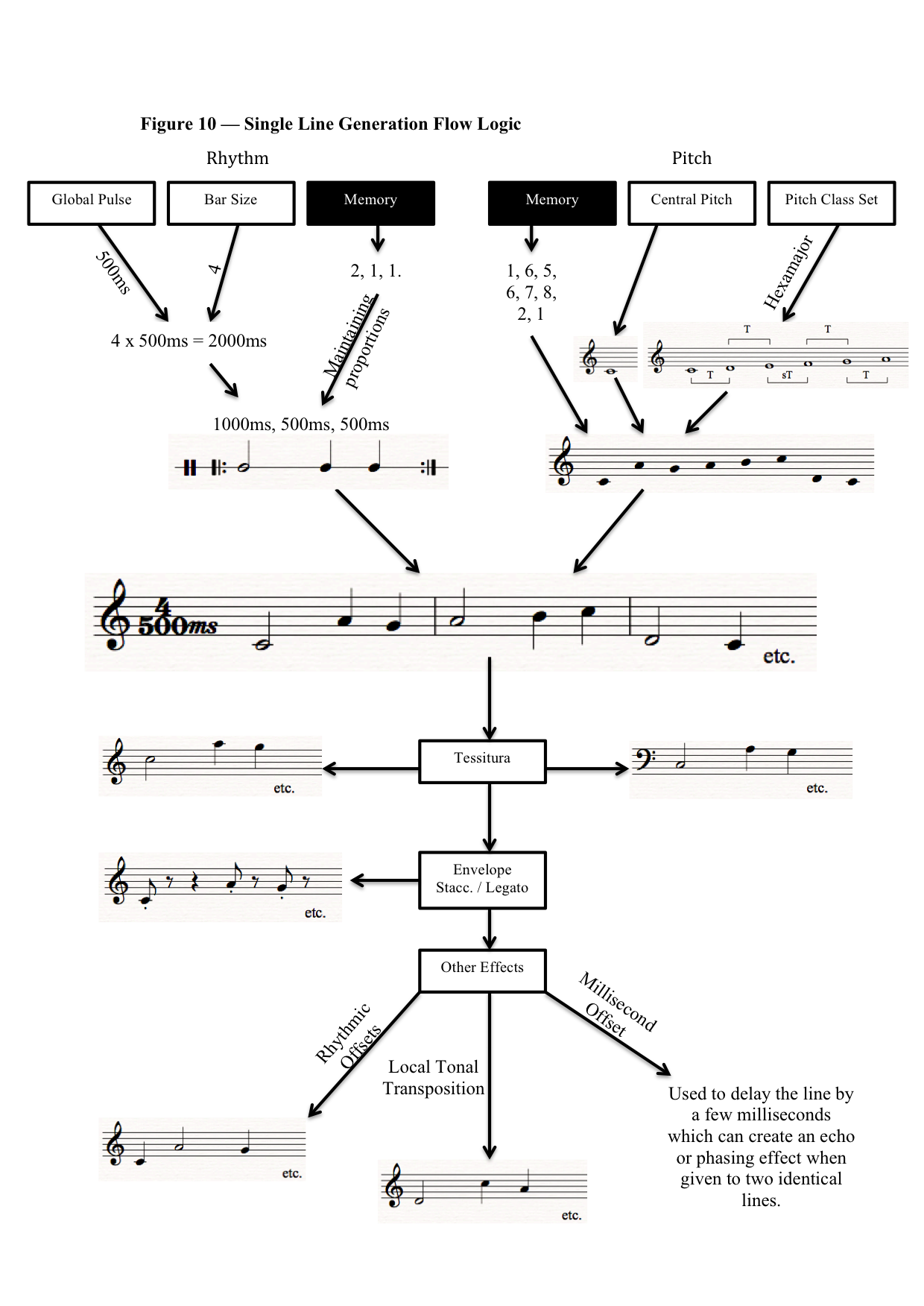

The goal of GMGEn (put forward at the beginning of this chapter) was to create a fluctuating microcosm while also creating a consistent score-like macrocosm across multiple performances. When the program is first loaded the computer generates batches of numbers and saves them to memory. These memories are the raw material from which all music is generated in GMGEn. Based on many parameters set by the user, the numbers are then manipulated through the program to produce output (see figure 10 below for a simplified version of this process). All white boxes in figure 10 show choice parameters that can be changed by the user (or bound into a preset); all black boxes show generations produced by GMGEn. Some possible outputs are shown and used as examples through the algorithm. The lower half of the figure shows some of the effects and processes that can be placed upon the main musical line created, which itself is shown in the centre of the figure. To keep consistency throughout a performance the original memories are recalled, but they can be regenerated upon beginning the piece again. The memories could also be regenerated from an in-game trigger if necessary.

Master-presets create a score-like consistency across the macrocosm by affecting any numbers generated in the memories, which are then processed through their logic pathways, in the same ways each time. A master-preset in this sense is synonymous with a specific musical personality. With consistent activation, the same master-preset will produce a similar-sounding style of musical composition regardless of the microcosm of its memories, which are randomised each time they are regenerated. The building of master-presets was achieved while constantly regenerating the memories. In this way I was able to adjust variables until the program was producing music that conformed to my intuitions. This way I knew that repeated generations of the memories would create similar music, and work proceeded empirically. I have created ten different personalities as a basis for the generated narrative work that accompanies this paper.[31] I contend that GMGEn should be considered a musical instrument rather than simply a musical piece; by way of adjusting the inner DNA of the master-presets, GMGEn’s output is as customisable as that of any other acoustic or digital instrument.
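The relationship between memories and master-presets can be sketched in Python. This is a heavily simplified illustration and not GMGEn's MaxMSP logic: the `MasterPreset` fields, the pairing of two random numbers per event, and the `render` mapping are all assumptions.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class MasterPreset:
    name: str
    pitch_set: tuple   # pitches available to this personality
    max_step: int      # largest melodic move, in set positions
    durations: tuple   # allowed durations, as multiples of the pulse


def generate_memory(rng, size=16):
    """Raw material: pairs of random numbers in the range [0, 1)."""
    return [(rng.random(), rng.random()) for _ in range(size)]


def render(memory, preset):
    """Process the same raw numbers through the preset's constraints."""
    events = []
    position = len(preset.pitch_set) // 2
    span = 2 * preset.max_step + 1
    for a, b in memory:
        step = int(a * span) - preset.max_step  # in [-max_step, max_step]
        position = min(len(preset.pitch_set) - 1, max(0, position + step))
        duration = preset.durations[int(b * len(preset.durations))]
        events.append((preset.pitch_set[position], duration))
    return events


calm = MasterPreset("calm", ("C", "D", "E", "F", "G", "A"), 1, (1.0, 2.0))
rng = random.Random(7)
memory = generate_memory(rng)
first = render(memory, calm)
again = render(memory, calm)                      # recalled memory: same music
regenerated = render(generate_memory(rng), calm)  # new memory, same personality
```

Recalling the same memory reproduces the music exactly, while a regenerated memory yields different notes that still obey the preset's pitch set, step size and durations.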

The musical transitions GMGEn makes are not designed to be successful in all cases. The artistic reasoning for this is twofold. First, the narrative work it is designed for features an overconfident, arrogant, fictional AI system, called PAMiLa, who believes its intelligence far outstrips that of a simple human. That this fictional intelligence might occasionally be unable to compose a simple transition, regardless of confidently suggesting that it can, adds a human flaw to the artificial character. Second, as I know it is only my perception of certain transitions as ‘successful’ or otherwise that makes them thus, I do not expect my view of success to be the same as that of any other human observer. It is possible, and probable, that there is a person who perceives every transition that GMGEn makes to be a success, just as there is the opposite person who perceives every transition to be a failure. These observer cases would still exist even if I were the former individual. This, coupled with my contention that artistic beauty comes from perceived perfections within an imperfect whole, or rather the order (success) that is found from disorder (failure or otherwise), leaves me satisfied with the position I am in: perceiving some of GMGEn’s transitions as failures, which I would never dream of composing myself, and some as successes, which I could never have thought of myself.

The work for generative narrative, which is found in the InteractivePortfolio_Application, directly mimics the scenario found in an open world game such as The Legend of Zelda.[32] This piece takes pre-composed segments of a narrative and combines them together in a non-linear string. Each of these narratives is assigned a musical personality from GMGEn to accompany it. When a narrative segment is complete a new one is selected and the musical personality transition begins in GMGEn. It is simple to map the sentences of the narrative onto a virtual 3D game world and to see the benefit that an engine skilled at creating transitional material brings to game music scenarios. A working version of GMGEn, with ten personalities is available for the reader to explore within the Demonstrations_Application.[33]

[1] D Cope, ‘An Expert System for Computer-assisted Composition’, in Computer Music Journal, vol. 11, no. 4, 1987, p. 30.

[2] K Muscutt and D Cope, ‘Composing with Algorithms: An Interview with David Cope’, in Computer Music Journal, vol. 31, no. 3, 2007, p. 18.

[3] D Cope, ‘Facing the Music: Perspectives on Machine-Composed Music’, in Leonardo Music Journal, vol. 9, 1999, p. 81.

[4] A Eigenfeldt, ‘Real-time Composition or Computer Improvisation? A composer’s search for intelligent tools in interactive computer music’, in Electroacoustic Studies Network, 2007.

[5] A Farnell, ‘An introduction to procedural audio and its application in computer games’, in Obiwannabe, 2007, viewed 30th October 2013, http://obiwannabe.co.uk/html/papers/proc-audio/proc-audio.pdf

[6] A Berndt and K Hartmann, ‘Strategies for Narrative and Adaptive Game Scoring’, in Audio Mostly, 2007, viewed 19th February 2014, http://wwwpub.zih.tu-dresden.de/~aberndt/publications/audioMostly07.pdf

[7] T Winkler, ‘Defining relationships between computers and performers’, in Composing Interactive Music: Techniques and Ideas Using Max, MIT Press, 1999, pp. 5-6.

[8] J Dowling, ‘Tonal structure and children’s early learning of music’, in Generative Processes in Music, J. A. Sloboda (ed.), Oxford University Press, Oxford, 1988, p. 152.

[9] Kaae, pp. 77-78.

[10] G. E. Lewis, ‘Interacting with Latter-Day Musical Automata’, in Contemporary Music Review, vol. 18, no. 3, 1999, pp. 99-112.

[11] Winkler, ‘Defining relationships between computers and performers’, p. 28.

[12] C Bodman Rae, The Music Of Lutoslawski, Omnibus Press, New York, 1999, pp. 75-76.

[13] Dowling, p. 151.

[14] G Cooper and L. B. Meyer, The Rhythmic Structure of Music, Phoenix Books, University of Chicago Press, 1963.

[15] Cooper and Meyer, pp. 1-11.

[16] Cooper and Meyer, p. 4.

[17] See ‘MUSIC SYSTEMS’ tab in InteractivePortfolio_Application. Click any ‘Generate’ button to generate a new work.

[18] See Tab 3 in Demonstrations_Application and select ‘Full Random’ from the drop down menu.

[19] See Tab 3 in Demonstrations_Application and select ‘Full Step’ from the drop down menu.

[20] See Tab 3 in Demonstrations_Application and select ‘Computer Hybrid’ from the drop down menu.

[21] See Tab 3 in Demonstrations_Application and select ‘Human Hybrid’ from the drop down menu.

[22] Oxford English Dictionary definition, viewed 30th October 2013.

[23] See Tab 4 in Demonstrations_Application and explore the drop down menus.

[24] See Tab 4 and select 3, or more, lines from the first drop down menu.

[25] Winkler, ‘Strategies for Interaction: Computer Music, Performance, and Multimedia’, p. 2.

[26] Rae, p. 79.

[27] D Cope, ‘One Approach to Musical Intelligence’, in IEEE Intelligent Systems, vol. 14, no. 3, 1999, pp. 21-25.

[28] Clarke, p. 34.

[29] Lewis, p. 105.

[30] Lewis, p. 105.

[31] See Tab 5 in Demonstrations_Application.

[32] See ‘NARRATIVE SYSTEMS’ tab in the InteractivePortfolio_Application.

[33] Tab 5 within the Demonstrations_Application; click any personality and wait a few moments. The user is also able to regenerate the memories on-the-fly showing a new version of all personalities.