Introduction

The beginning of this appendix will discuss general concepts surrounding the varied material submitted as part of this DPhil. First I will discuss how this variety brings strength to this thesis. I will then discuss the impetus for submitting a body of such mixed works and the justification for the inclusion of both notated and digital interactive or generative/automatic works. I will then discuss how the path of my critical writing led to the exploration of the later digital works and how they act as experiments within this exploration. Finally, this appendix will discuss how these research interests pertain to each piece, how they relate to aesthetic and technical ideas and what questions may emerge from this discussion.

At first glance, the reader could be forgiven for thinking that the materials constituting this thesis do not contribute towards a combined whole. This body of work combines instrumental music, digital music, interactive music, generative/automatic music, classical music, popular music, popular media, scientific analysis, and musicological analysis. Without both my childhood (and adulthood) investment in the video-game art form and my parallel musical training, I might not have had the capacity to form any critical response to the current state of video-game music’s reactivity, which the critical writing of this thesis explores. Furthermore, without my classical training and my digital interests, I would have lacked the full technical framework required to knit the classical composer to the computer programmer in an effort to obtain any answer existing between these two disciplines. This generalist perspective also affords a frame of reference that can be difficult to maintain over the course of nearly half a decade’s work. It is on these grounds that I believe the breadth of this thesis to be its greatest strength. This thesis contributes not only to game studies (a youthful field in rapid expansion), but also to contemporary popular music, video-game music and theory, and classical music, and touches the spaces in between.

With this said, the combining factor is simple: this work came from a single mind over a period of nearly half a decade. Change was inevitable. The change of musical style and musical interests is in line with my exploration into the answers required of the critical writing.

From a time just prior to the beginning of this project, I had begun to feel limitations in the traditional notated score. I was increasingly unable to communicate efficiently, through the score alone, the real sounds I wished performers to produce. I was not comfortable using descriptive methods, as this would usually end up taking the form of a sentence, or even a brief paragraph, on the opening page of the score, which, in an admittedly small sample of experience, was often not read by the time-constrained performer. Similarly, any non-standard prescriptive notation would confuse performers to a point where, I found, their enthusiasm for the work diminished. While it is likely I had interacted with an unrepresentative sample group of performers, I found this suggestive that the score might be a fundamental tool in this miscommunication. I could see an argument that, for traditionally trained western musicians, the score enforces limitations on pitch, time and direct interactivity for the non-performer, and could decrease interest over multiple hearings. This last point (and, to an extent, the second to last) is of course excluded for music with improvised content. Further, none of these statements is attributable to all musics in any sense. These comments are simply my generalised thoughts at the time, and are those which gave rise to the changing musical focuses seen throughout the portfolio. Explorations of the limits of the score can be seen in the earliest two works in this portfolio.

In Traversing the Centuries, notational limitations existed where I needed to write explanatory notes in the preface to describe particular sounds. I have been very pleased with all performances of this work as the singers and pianist have often given appropriate time to finding an interpretation aligned with my own. The same cannot be said of my experience with Abiogenesis, which consisted of a rushed sight-read workshop. As many of the parts contained quarter-tones, this provided an unreachable point for performers seeing the score for the first time. From communicating with professional classical performers I’m aware that it is not common practice to include training for on-the-spot sight reading of non-standard pitches such as quarter-tones. In these instances performers would often practise these specific moments thoroughly before a particular rehearsal. That my quarter-tone-ridden score could not be performed by sight was incompatible with the standard way that the majority of classical music is currently performed. This therefore dissuaded me from writing for this medium. At the time I felt that the sight-limit of classical performance confines composers to producing works limited to standard notation. This is in no way a ubiquitous issue and is limited to those events where performers are simply not afforded appropriate time to devote to a new work. Therefore, I sought other forms of music making not contingent on this observation.

During the same early period of study I had begun learning to produce applications with MaxMSP. With a basic technical understanding of MaxMSP, I realised how it could be manipulated to explore questions I’d had about active music in video games. It was the exploration of these questions, pertaining to the critical writing, which culminated in the works present in my compositional portfolio submission. The work Deus Est Machina (NARRATIVE SYSTEMS) within the Interactive Portfolio uses the completed generative transitional system (GMGEn), both proposed and built as a working proof for the discussion in the critical writing portion of the submission. The Generative Triptych of Percussive Music (MUSIC SYSTEMS) exists as an exploration of a generative rhythmic engine created as part of the discussion within chapter two of the critical writing. This generative rhythmic engine becomes an integral part of the Game Music Generation Engine (GMGEn). The work Starfields (FLIGHT SYSTEMS) contributes to the thesis in a more general way. While Starfields (FLIGHT SYSTEMS) contributes directly to the thesis as an active experiment on the branching music work of the first chapter of the critical writing, it also uses techniques garnered later, during my research for the second and third chapters. In particular, the techniques of harmonic-clouds and of generative melodies and rhythms are all used within this work. In essence, Starfields (FLIGHT SYSTEMS) is a work that incorporates more of the thesis than any other piece, yet in a much more canvassed, rather than specific, way. While still under the correct description of ‘interactive experience’, Starfields (FLIGHT SYSTEMS) is the closest to a ‘video game’ that any of the works submitted becomes.
The contribution of Starfields (FLIGHT SYSTEMS) to the portfolio is twofold: it is not only a work directly relating to the research interests of the three chapters of the critical writing, but its inclusion also affords the portfolio a more experimental overview of interactivity, which the standard written-only thesis may not allow.

In summary, the thesis combines my compositional movements and experiments in different methods of music making within the classical music sphere. It spans the period of time where I began to embrace technology in my works, while still maintaining techniques gained from writing notated works earlier in my portfolio. Traversing the Centuries shows an attempt at temporally shifting music within a work of static composition (static here defined as the antithesis of dynamic, i.e. music with a relatively constructed fixed temporal length): the music of one section gets repeated and spread across a greater extent of time as the work unfolds. Abiogenesis contains the seed of my ideas about harmonic-clouds and their treatment to aid in a dynamic music. Starfields (FLIGHT SYSTEMS) and the Generative Triptych of Percussive Music (MUSIC SYSTEMS) combine and explore techniques directly incorporated in the Game Music Generation Engine (GMGEn) (discussed in chapters 1 and 2 of the critical writing) prior to its completion. Deus Est Machina (NARRATIVE SYSTEMS) uses GMGEn for its musical production. This work is completely generated based on the work put forward in the body of critical writing.

The rest of this appendix will discuss the research interests relating to each work submitted as part of the portfolio, how they relate to aesthetic and technical ideas and what questions may emerge from this discussion. As a chronological order highlights the gradual explorations and discoveries most readily, this order will be used in the questioning of the works submitted. I will therefore begin from the earliest work written and end with the most recent.

Traversing the Centuries

In this work I attempted to explore the different viewpoints from which the text could be read. The text discusses a view of historic construction from the descendants’ point of reference, perhaps suggesting a present existing in a ‘now’ time. However, the text also firmly acknowledges that a present ‘now’ existed in the past for our ancestors, though it lies obscured from the direct view of their descendants. The text suggests that although this history may lie obscured, it still exerts a direct influence on our lives, whether or not we understand or accept this influence. The striking metaphor used by Anthony Goldhawk to shed light on the histories of our ancestors is that of human excavation or natural erosion.

I intended to blend the discovery of the ancestral histories and the present world together throughout the work, in essence gradating between the narrative of the present and the narrative of the past. As the text was visually presented in four couplets separated by a paragraph, these appeared as sedimentary layers, evoking the themes of excavation and erosion. Therefore I chose to build a work where the musical framework repeated four times. The piece therefore has a varied strophic form and becomes more complex as we move towards the close. Each time this framework was to be repeated more and more music would be discovered ‘between’ the notes that existed in the previous section. Therefore, the work becomes more complex during the course of the piece and highlights the viewpoint (or narrative) from the present perspective at the opening (with notes obscured from audibility), and the viewpoint from the past perspective at the close (with all of the notes fully excavated). In other words, the detail of the piece is therefore excavated or eroded to the surface much like the poem suggests. A musical motif I intended to bring out was an accelerating crescendo which in this piece trills, mostly, on the interval of a third and is cross threaded and layered in the piano lines, also occasionally in the vocal line, throughout the piece. Further to adding more pitches and augmenting gestures in the musical lines, another technique was used in the vocal line where I augmented the syllables into sub-syllabic components. For example the single syllabled word ‘flesh’ becomes a two-syllabled word ‘fle-sh’ in the music. In this example, the second syllable is achieved by blowing air through the teeth as a ‘sh’ sound.

While I feel the score communicates the ideas of the music well, one memory stands as contrast: the final word in the vocal part is intended to suggest a ghostly whisper from the ancestors spoken directly through the final sub-syllable of the narratively present singer. No performer of this work has correctly identified a vowel notated with a crossed note-head as identifying a whispered note. It seems pedantic to take issue here as my solution was simply to add the word ‘whispered’ above this note; however, I saw this solution as more of a ‘work around’ than an elegant answer to the miscommunication of my score.

This work was a commission from a close friend who required the particular instrumentation used. Having already begun working with MaxMSP at the time I had begun to think of ways in which the exploration of the multiple narratives of past and present could work from an interactive perspective. The example I had thought of at the time was that of a work where two pieces of music happened simultaneously but could not be heard simultaneously. In other words, one ‘side’ of the music could be switched off or on via some interactive trigger point. In this hypothetical piece the musics would represent, first, the narrative from the past’s perspective, and second, the narrative from the present perspective. Taking the feature of contrast I used in this work—that of the obfuscation of musical detail—the two musical works would be identical in temporality. The music attaching to the detailed past of the ancestors would be rich and complex from start to finish. The music attaching to the obfuscated view from the present of the speaker of the poem, the descendant, would be the same version with many notes missing. An interactive element would be added where the user could switch between the musical layers and hear the full texture, thus interactively dipping into, or out of, the musics of the ancestors’ (past) or the descendants’ (present). The idea put forward here and thought up while working on Traversing the Centuries would eventually feature in the music of Starfields (FLIGHT SYSTEMS) during the first MiniGame played in the first 5 minutes of the piece. In Starfields (FLIGHT SYSTEMS) two musical textures are played that contrast in rhythm and timbre but coalesce in harmony and textural complexity. While the version of this idea presented in Starfields (FLIGHT SYSTEMS) is different from the fully flowing multi-work I’d hypothesized when writing Traversing the Centuries they both have the same root.
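The hypothetical two-layer piece described above can be sketched in a few lines of code. The following Python is purely illustrative and is not taken from any patch in the portfolio: the function names `thin` and `audible_events`, the rule of keeping every third note for the ‘present’ layer, and the representation of notes as (onset, pitch) pairs are all assumptions made for the example.

```python
from bisect import bisect_right

def thin(full_layer, keep_every=3):
    """Derive the obscured 'present' layer by keeping only every n-th note
    of the fully detailed 'past' layer (an assumed thinning rule)."""
    return full_layer[::keep_every]

def audible_events(full_layer, sparse_layer, toggle_times, start_with_past=False):
    """Return the notes a listener would hear.

    Both layers run in parallel on the same timeline, but only one is
    audible at a time. Each entry in the sorted list toggle_times flips
    which layer is heard from that moment onwards.
    """
    heard = []
    for layer, is_past in ((full_layer, True), (sparse_layer, False)):
        for onset, pitch in layer:
            flips = bisect_right(toggle_times, onset)  # toggles at or before onset
            past_active = start_with_past ^ (flips % 2 == 1)
            if past_active == is_past:
                heard.append((onset, pitch))
    return sorted(heard)

# Six notes of 'past' music as (onset in beats, MIDI pitch); hypothetical data.
past = [(0, 60), (1, 62), (2, 64), (3, 65), (4, 67), (5, 69)]
present = thin(past)
heard = audible_events(past, present, toggle_times=[2.5])
```

Because the sparse layer is a strict subset of the full layer, switching at any moment leaves the music aligned in time, which is the property the hypothetical piece relies on.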

As a work under the contemporary classical umbrella my experience with this work has been very positive. I’ve had a strong relationship with the singers and pianists who have performed the work to date. All performers involved have given generous levels of attention to master the challenges of the work. For this reason, in a large respect, this work acts almost as a scientific control against the rest of the portfolio. It stands at a point before I’d fully realised my interest and affinity for digital and interactive compositional methods. It was these fresh-seeming ideas of digital interaction, inspired through MaxMSP and the creation of this work that led me to explore these avenues further during the rest of my portfolio. My issues with notation further gave me impetus to move to working in different media, at least for a time. In summary, while this work is firmly in the domain of the non-dynamic/non-interactive, it provided the material on which my newfound digital outlook could express new creative output.

Abiogenesis

The narrative idea I’d had for Abiogenesis featured a creature emerging for the first time from its primordial state of non-living to become living. The chance to work with orchestral forces for Abiogenesis allowed me to experiment with a new method of composing. Prior to this work I’d spent a lot of my time composing at the piano to discover the pitches and harmonies I wished to use in my works. While I enjoyed the audible feedback given by the piano, for me, this came with the drawback of creating moments of inconsistency on the page that did not match the ‘score’ in my head. My explanation for this is that, having explored many different versions of a particular musical moment while composing at the piano, I found eventually having to choose only one of them ultimately unsatisfying, and this led to the disparity between the real single-score and my imagined multi-score. I would call these moments ‘fluxing’ as they never seemed to have completely phased into the score of the real. While this is an odd psychological phenomenon, I’m still aware of it when composing at the piano to this day. For Abiogenesis I intended to completely remove the piano from my method and instead focus on just the creation of the real score.

As I was no longer bound to the piano to gain my harmonic language I instead used the technique of selecting pitch-class sets for various sections and subsections of the work. I found I naturally aimed towards this harmonic technique to consolidate harmonic consistency across small-scale events within the work. I used the overarching narrative of the work to govern my use of texture and horizontal movement; thus, I generally used the orchestra as one gestural sounding body near the opening and split the orchestra into constituent families further through the work. For me this was intended to represent the creature becoming more than just a single mass of non-complexity, and instead evolving to a complex multiply-organed being capable of breath and pulse.

At the time of writing this work I had been experimenting with creating generative harmonic consistency within a computer program. I had designed a small program that would randomly generate notes in a single musical line one after another at constrained random time intervals. Combining two or more of these generating musical-lines created harmonic interest. The question that arose was how to create a harmonic consistency between two or more randomly generating musical lines. The full discussion can be found in chapter 2 of the accompanying critical writing; however, my conclusion was to provide the computer with specific predesigned pitch-class sets which force the generative engine’s decisions to be that of only concurrently pleasing options. I term these moments of potential harmony harmonic-clouds. These moments are macro-predictable but micro-indeterminate. This digital method of generative composition has similarity to the methods used to choose harmony and gesture in Abiogenesis.
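As a minimal sketch of the harmonic-cloud idea, and not the program described above, the following Python generates two independent random lines that are each restricted to the same predesigned pitch-class set. The function name `generate_line`, the particular pitch-class set, the octave range and the timing bounds are all assumptions chosen for illustration.

```python
import random

def generate_line(pitch_classes, n_notes, rng,
                  min_gap=0.25, max_gap=1.5, octave_range=(3, 5)):
    """Generate one musical line as a list of (onset_seconds, midi_pitch).

    Each note is placed a constrained random interval after the previous
    one, and its pitch is drawn only from the supplied pitch-class set.
    """
    events = []
    t = 0.0
    for _ in range(n_notes):
        t += rng.uniform(min_gap, max_gap)      # constrained random timing
        pc = rng.choice(pitch_classes)          # stay inside the cloud
        octave = rng.randint(*octave_range)     # inclusive on both ends
        events.append((round(t, 3), 12 * (octave + 1) + pc))
    return events

# A hypothetical harmonic-cloud: the pitch classes C, D, E, G, A.
cloud = [0, 2, 4, 7, 9]
rng = random.Random(1)  # seeded so the example is repeatable
line_a = generate_line(cloud, 8, rng)
line_b = generate_line(cloud, 8, rng)
```

Which notes sound, and when, differs on every unseeded run (micro-indeterminate), yet any vertical combination of the two lines can only contain members of the chosen set (macro-predictable).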

With this new method of composition I succeeded in creating a work that I considered non-fluxing, based on the description of that term above. While this success may have brought me a sense of comfort in a new compositional method that allowed my music to be set by the score, this instead triggered the opposite response. I felt uncomfortable with the fact that some of the musical moments I’d discovered while composing at the piano were now being lost as the score required me to choose only one. Abiogenesis was therefore my final work of static (as opposed to reactive or dynamic) music that I wished to submit for this portfolio.

Generative Triptych of Percussive Music (MUSIC SYSTEMS)

The Generative Triptych of Percussive Music (MUSIC SYSTEMS) is an exploration of constrained randomness when dealing with structure on different levels of organisation. In the critical writing portion of this thesis I discuss how micro-level randomness can be grouped into larger sections with defined structure. This work acts as both a proof of concept for this idea and further as an exploration of the instrument I created to demonstrate this concept.

I used the ChucK coding language, developed for the Princeton Laptop Orchestra (PLOrk), to design a class (a self-contained object of code) which had a ‘play’ method (an executable block of code with a specific function). When triggered, the play method would create a single bar of generated percussive music based specifically on parameters chosen by the composer. The parameters the composer could pass to the method included the number of beats, the number of times the bar is repeated and the tempo of the bar. These main parameters governed the general attributes of the bar. Further to these parameters, a set of ‘chance’ parameters existed for each percussive instrument. To create a work out of this functionality I simply had to run consecutive calls to the play method. Each call would have a specifically composed set of parameters designed to allow a specific bar to fulfil a purpose in the greater whole of the piece. The three pieces in the Generative Triptych explore different avenues for working with this same play method. The mechanics that govern the ‘chance’ parameters are explained fully in both the text and video programme notes for this work.
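The shape of such a play method can be sketched as follows. This is written in Python rather than ChucK and is an illustration only: the actual chance mechanics are those documented in the programme notes, whereas here each ‘chance’ value is simply assumed to be the probability that an instrument sounds on a given subdivision, and a repeated bar is assumed to replay the same generated pattern. The names `play`, `steps_per_beat` and the instrument labels are hypothetical.

```python
import random

def play(beats, repeats, tempo_bpm, chances, rng, steps_per_beat=4):
    """Generate one bar of percussion and repeat it.

    chances maps an instrument name to the assumed probability (0.0 to 1.0)
    that it is struck on any one subdivision of the bar.
    Returns (events, duration) where events is a sorted list of
    (onset_seconds, instrument) and duration is the total length in seconds.
    """
    steps = beats * steps_per_beat
    step_seconds = 60.0 / tempo_bpm / steps_per_beat
    # Decide the content of the bar once, instrument by instrument.
    bar = {inst: [rng.random() < p for _ in range(steps)]
           for inst, p in chances.items()}
    events = []
    for r in range(repeats):
        offset = r * steps * step_seconds
        for inst, hits in bar.items():
            for i, hit in enumerate(hits):
                if hit:
                    events.append((offset + i * step_seconds, inst))
    duration = repeats * beats * 60.0 / tempo_bpm
    return sorted(events), duration

# A piece is then a sequence of composed calls, one per bar type.
rng = random.Random(0)
piece = [
    play(4, 2, 120, {"kick": 0.5, "hat": 0.8}, rng),
    play(3, 4, 90, {"kick": 0.2, "snare": 0.4, "hat": 0.6}, rng),
]
total_seconds = sum(duration for _, duration in piece)
```

The composer’s decisions sit in the argument lists, while the surface detail of each bar is left to constrained chance, which is what allows structure to be composed from above the bar level.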

The focus of research around this work was threefold. First, I wished to prove that composing at the bar level, in a digitally generative work, is directly relatable to the equivalent level in a notated work when employing constrained randomness. This was the reason for titling each piece ‘Sonata’, owing to the sonata’s status as one of the pinnacles (or pitfalls, depending on your perspective) of structure in the Western classical tradition. Constrained randomness established a predictability at the bar level that allowed for structured composition from above the bar level. Second, I wished to design a working method for generative rhythm, which I would later include in the Game Music Generation Engine (GMGEn) for the critical writing. As has been mentioned above, GMGEn runs the music for Deus Est Machina (NARRATIVE SYSTEMS). The third focus follows on from my discomfort with having to decide on particular versions of musical moments to go into a static work. This work was the first I’d created that resulted in multiple recognisable works of distinct music from the same score.

I believe this work is successful in creating variety over multiple playthroughs while maintaining the essence that makes each composed work its own. Multiple playthroughs therefore become a feature of the work. It’s important to note that it was not my intent, with this work or any others in this portfolio, to create works of infinite interest. The term ‘multiple playthroughs’ here refers to an interest equivalent to that which might be achieved from multiple recordings of an acoustic work: greater than zero and yet non-infinite. In other words, though the number of variations a single score of these sonatas could produce is very large, I do not see a listener’s interest extending to this number of iterations. This is a psychological feature bound by humans’ innate skill at pattern recognition. More research would be required to find the point at which these works no longer excite due to this biological feature.

Starfields (FLIGHT SYSTEMS)

The intent of this work was to create art music incorporating player control. This is a feature often used to varying degrees of extent and success in game audio. More personally, I wished to explore player-controlled music, in this case controlling the intensity of the music. The aim of this work was to put the listener in the position of bending some of the musical output of the piece. The player does not drastically change the overarching course of the piece, which remains largely fixed. As an extension of the transitional engines discussed in chapters one and two, Starfields sits at a point between the evolution of the first two chapters and the third. While chapter three of the critical writing discusses a hybrid engine of the first two chapters, Starfields is not an intermittent music engine. Starfields is a work of interactive dynamic music. The narrative of the work involves the player actively competing with the artificial intelligence PAMiLa, the antagonist of the digital portfolio meta-work.

A single slider on the right-hand side of the work provides the majority of the musical control the player has. Moving the slider up or down will, at different moments, increase or decrease the foreground texture, increase or decrease the background texture, trigger pitches on a digital harp, or time-shift the work, among other effects. During the narrative take-over of the ship’s systems by the corrupted AI the player will also experience a loss of control, which I used in juxtaposition to the other forms of more direct control. The methods used to create this work are detailed more fully in the accompanying text and video programme notes.

Before completion of the work I ran a couple of user acceptance testing (UAT) sessions with friends and family. Several points were raised in the user feedback. Two particular comments emerged which encouraged me to make direct changes to the work. The first concerned the variety of ways in which players responded to the shield meter. When the shield meter falls below certain thresholds the heads-up display flashes a warning and sounds an alarm to show that the shields need to be replenished. Most users commented that the REPLENISH SHIELDS button needed to be pressed too often. As a result I changed the shield decay rate and also the amount replenished by a single click, drastically decreasing the number of clicks of the REPLENISH SHIELDS button needed. I also made available a typed command that was previously hidden, allowing the user to activate an ‘auto-shield’ feature that removes the need for micromanagement of the shields altogether. Another interesting point regarding the shields was that they appeared to split the demographic of players by age. Older players were very nervous about allowing the shields to deplete fully. This was seen, by these players, as a ‘losing’ condition and was therefore to be avoided. For these players the shields became a kernel of negative focus that I did not intend (which resulted in the change mentioned above). In contrast, younger players saw the depletion of the shield meter as their first method of exploration. These players sought the answer to the question of what would happen if they took no action to preserve their avatar’s life by replenishing the shields. This is a typical phenomenon in video gaming, where players will push the boundaries of their avatar’s mortality to gain a greater grasp of the rules of the game. In effect they force a lose condition to find out exactly where that lose condition stands in relation to the general universe of the game.
I believe that this was the same phenomenon I was witnessing during the user acceptance testing.

The other issue that emerged later was in response to the visuals of the user interface. One commenter said they were “expecting Grand Theft Auto”. It is important to note that at the time of release Grand Theft Auto V (Rockstar Games 2013) was the most expensive game ever produced, with a budget of around 270 million USD. While I’m unsure as to the intent of the commenter’s words, I felt this was an unfair comparison to make. However, this comment made me aware that if visuals exist at all then there will be a subset of players who expect extremely high standards from the visual content. The question arising from this is whether visuals built in MaxMSP can perform the visual role adequately. Many modern games have a ‘retro’ aesthetic and are just as popular as AAA-standard games with photo-realistic graphics. Minecraft (Mojang 2009) is an example illustrating this. The block-like, procedurally generated world of Minecraft does not seek to be photo-realistic to any arguable extent. The creator of Minecraft, Markus ‘Notch’ Persson, finds that the pursuit of graphical realism limits the potential innovation achievable by games designers. The same conclusion can be drawn by looking at the stylised world of The Legend of Zelda: The Wind Waker. This stylisation allowed the Legend of Zelda franchise to compete with competitors employing hardware of vastly superior graphical capability, which was not available on Nintendo’s GameCube console. This entry in the Legend of Zelda franchise also appears less visually dated a decade on than its immediate successor, The Legend of Zelda: Twilight Princess, lending further support to stylisation. As realism isn’t a graphical or artistic style, game visuals attempting to mimic reality will inevitably appear dated with the advance of visual rendering technology. It was this argument that grounded my decision in favour of stylisation over realism in the visuals used in Starfields.
Further, this choice aligned with the technical, financial and temporal resources available to me.

This piece is the pinnacle of my working knowledge of MaxMSP at the time of submission on 16th May 2014. It went through a great deal of optimisation to get it to run on my system with the degree of consistency it has now. This cannot compare to the normal quality assurance (QA) a software product with a dedicated studio team would receive in the commercial world, and the piece should be treated as such; however, it is understandable that a piece of software in development can appear noticeably unfinished or unpolished when compared to software backed by the resources of a large technology company. This is owing to the fact that high levels of technological refinement have been normalised by today’s technical society. Immersion into the interface was less problematic for users comfortable with gaming interfaces. The same can be said for those users who are used to the everyday hacking necessities of digital laptop performance, a category to which this piece belongs.

Deus Est Machina (NARRATIVE SYSTEMS)

Deus Est Machina holds a twofold purpose within this portfolio. It exists as a proof of concept for the technical architecture set out for a generative transitioning system in the body of critical writing. It also exists as a compositional exploration of the instrument created. As this instrument was designed to generate music for indeterminately changing and indeterminately temporal scenarios, I designed a narrative work mimicking these scenarios, which also closely reflects the situation for which the instrument is functionally designed. Narrative ‘areas’ or ‘zones’ are set up within the personality structure of the music. These have been composed within the GMGEn instrument and amount to a detailed configuration of the way each of the personalities creates music. The logic built into GMGEn allows these zones of music (which I term musical personalities in the critical writing) to be transitioned to, or from, in a relatively smooth fashion. While the piece needed to incorporate all of these elements, it also needed to fit into the global meta-narrative of the portfolio of compositions. The way I chose to knit these two scales together was to have PAMiLa, the fictional AI, narrate the story to the listener. I wanted this piece to be more about the character of PAMiLa than about the actual narrative PAMiLa produced. The idea was to show that the personality of the artificial intelligence was more complex than one may superficially think, to the extent that PAMiLa presents human characteristics. For example, in the tutorial for the portfolio, PAMiLa shows its ‘god’ complex by referring to humans simply as ‘bios’, as well as through an arrogant consideration of its own work. The confirmation of this is found in the work Deus Est Machina (God Is the Machine), for which the title was carefully chosen. The computer is in control of your path, god-like, and yet also believes it is god-like compared to a human.
This resulted in the first iteration of the work which included a monotone synthesized voice which spoke the text using the basic voice synthesizer found on all commercial computer operating systems and is famously used by the physicist Stephen Hawking.

The work fully utilises GMGEn’s functionality in generating static material and triggered transitional material. I also believe it succeeds in invoking the god in the Machine, which can be read on several levels. In a self-referential way PAMiLa is the titular machine of the story while also being that story’s fictional creator, its ‘god’. This functions within itself (the piece: Deus Est Machina) and without, by reinforcing the meta-narrative of PAMiLa as flawed storyteller to the onboard pilot (you, the listener). The original version submitted had the synthesized voice very high in the mix, in an attempt to focus the listener on the story. However, this created a larger divide between the music and the locations evoked in the text than was intended. This has resulted in the changes made to the version of the work now submitted.

The major change I made to this piece was inspired directly by the video games of my youth. Before voice synthesis and voice acting were common in video games, the music would play alongside text that would be read by the player. As GMGEn’s genesis was inspired by video games from this era, it seemed to invite a natural solution to the problems presented by the inclusion of voice synthesis. In the current version of the work I have stripped the voice away and left the music to play over unread text. This text can now be read, or reread, by the user at any speed. This creates a better atmosphere and greater temporal space in which the musical generation of GMGEn can thrive. It further removes the layer of separation previously acting as a barrier to the immersion of the listener. I also added more controls so that the listener can choose which locations to explore in what is now an interactive text adventure instead of a story told, and controlled, wholly by PAMiLa. PAMiLa remains in control of the protagonist’s fate and of what that character is trying to achieve on their quest; however, relinquishing some of PAMiLa’s influence over Deus Est Machina in this version of the work created a stronger and more interactive piece that exploits GMGEn further than the original version did.

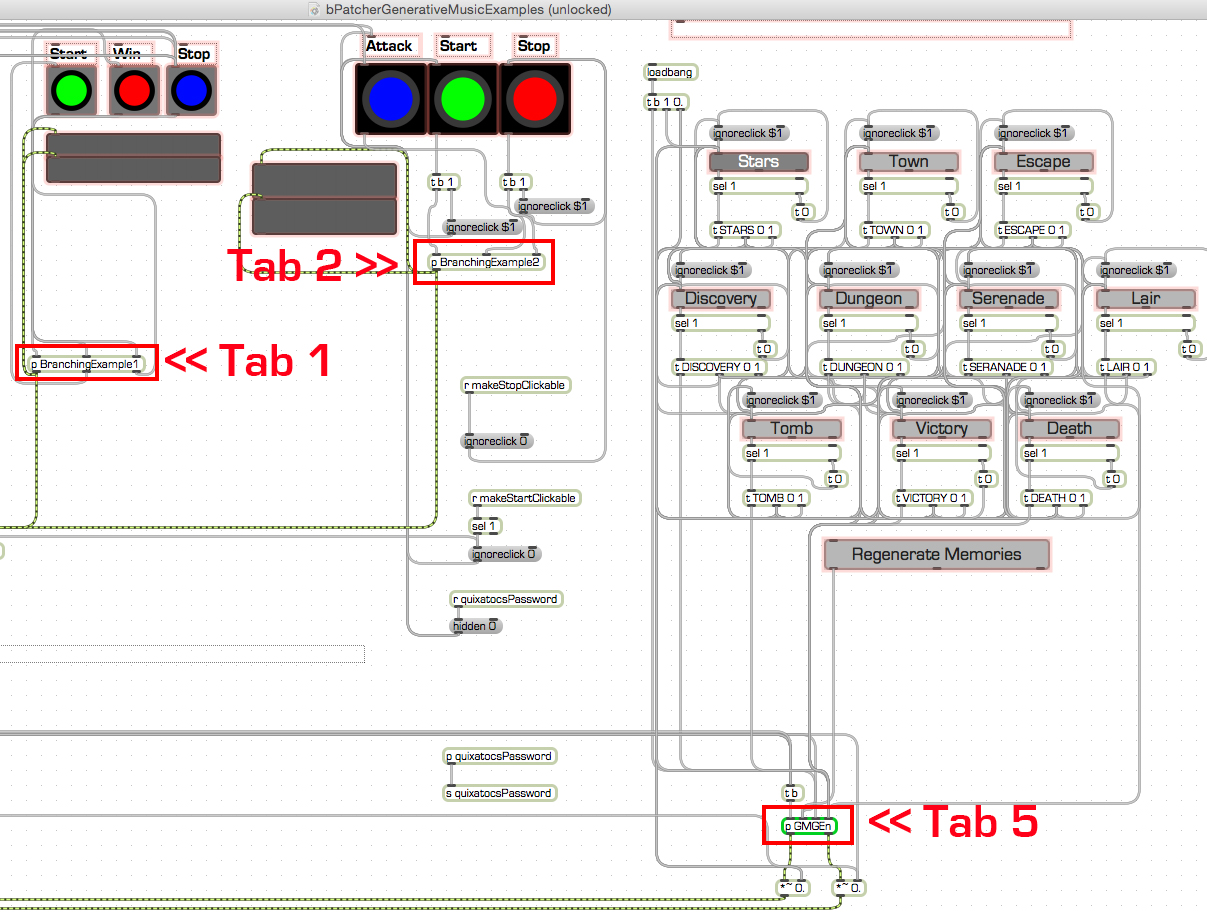

This appendix highlights the technical solutions I found to handling the musical transitions in response to a trigger. In the Demonstrations_Application Tabs requested by the examiners (1, 2 and 5) these triggers are all made by the click of a mouse on a particular interface button. This appendix will discuss the MaxMSP patches for each Tab (1, 2 and 5) in separate sections. As the MaxMSP patches (particularly for Tab 5) are complex, I have designed this document to explain and annotate significant points both from a technical and practical perspective. While my images and explanations give the full information required by the examiners, I have also added the location of these screenshots to the example titles should the examiners wish to explore these patches further. In the paper (physical) copy of this appendix all images will be grouped together at the end of the main body of text. On the web copy of this appendix the images will appear in line with the text.

The unlockable version of this MaxProject can be found here by downloading the .zip file and extracting the contained folders.

Once extracted, open the .maxproj file titled DemoPatches.maxproj in MaxMSP 6. This folder hierarchy needs to be maintained for Max to access all data correctly.

The patch logic that does all of the heavy work for tabs 1, 2 and 5 is contained in the small subpatches labeled in Demonstrations_Application Figure 1 below. This level of subpatch is not important to this discussion and merely shows the location of the bulk of the working logic in relation to the points being made by my critical writing; therefore, this level of zoom was used and the full patcher, which deals with other logic external to that requested by the examiners, has been omitted from this figure. The reader is advised to follow along with the unlockable version of the patch if further detail is required.

Demonstrations_Application Figure 1 – Top-level patcher of the bPatcherGenerativeMusicExamples.maxpat file

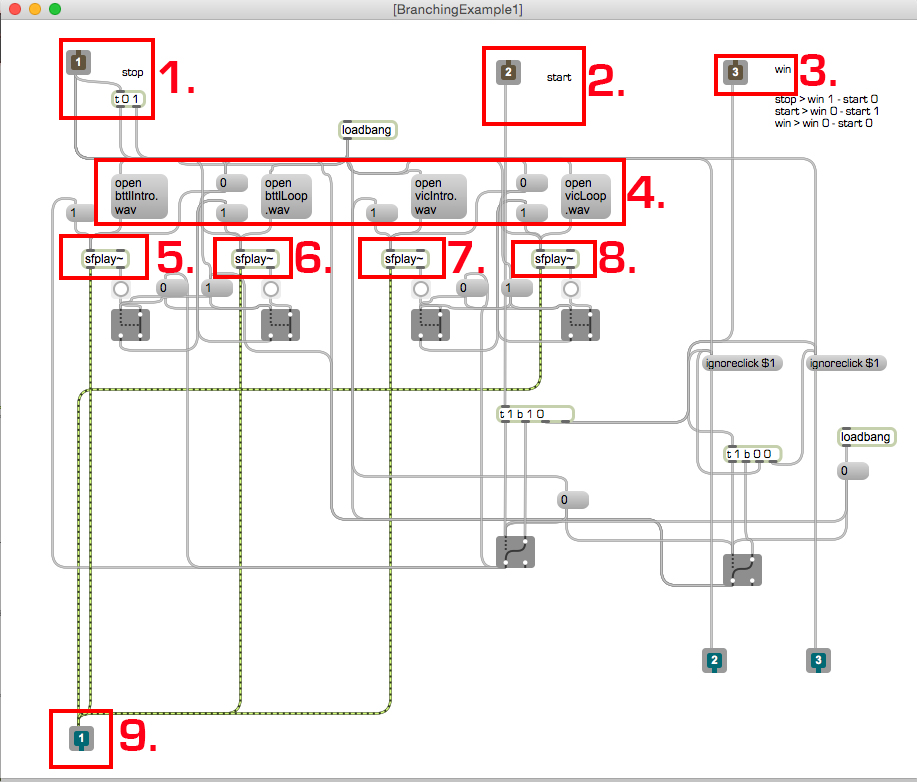

Tab 1 – Branching Example 1 Subpatch

This subpatch deals with the work logic for Tab 1, which plays an introduction to the battle music when the START button is pressed. After a few seconds this introduction music moves into the main looping phase of the battle music. This looping phase continues until the WIN button is pressed. Upon pressing the WIN button the patch will begin an introduction to the victory music. After a few seconds this victory introduction music will move into the main looping phase of the victory music. This will then continue until the STOP button is pressed. Note: the STOP button can be pressed to halt the music during either of the looping phases. The subpatcher for this logic is displayed in Demonstrations_Application Figure 2 below. This is conceptually explained further in chapter 1 of the critical writing.

Demonstrations_Application Figure 2 – Found in the BranchingExample1 subpatcher in the bPatcherGenerativeMusicExamples.maxpat file top-level patcher.

Inlets in annotation 1, 2 and 3 take the input from the START, WIN, and STOP buttons from the top-level patcher and pass this into the logic below. When the patch is loaded, the messages contained in annotation 4 are passed to the sfplay~ objects in annotation 5, 6, 7, and 8. These sfplay~ objects pass all of their sound out of the outlet in annotation 9, which is linked to the digital to analogue converter (dac) above in the top-level patcher. When a STOP message comes in, the patcher is set to its initial state. This means that all gates are set to their starting positions. When a START message comes in, the sfplay~ object in annotation 5 is triggered to start playing the bttleIntro.wav file. Once this object has finished playing, it sends a trigger out of its right outlet that starts the next sfplay~ object in annotation 6. This therefore plays the bttlLoop.wav. When this sfplay~ object finishes playing it triggers an output from its right outlet. If the WIN button has not been pressed this will just trigger itself, therefore playing another loop of the bttlLoop.wav file. However, if the WIN button has been pressed the sfplay~ object in annotation 6 will be sent a stop message and the sfplay~ object in annotation 7 will be sent a start message and the vicIntro.wav sound file will play. Once the WIN button has been pressed the play logic that has been described here continues, but instead of running through the sfplay~ objects in annotation 5 and 6 the messages will run through the sfplay~ objects in annotation 7 and 8. When the user hits STOP, all sound files will be sent a stop message and all gates will return to their original position. Other logic that is not included in this discussion pertains to stopping the user from being able to press START twice in a row, which would result in too many ‘on’ messages getting sent through the system.
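The play logic described above can be read as a small state machine. The following Python sketch is an illustration only and is not a translation of the MaxMSP patch: the class and method names are hypothetical, the file name of the victory loop is not given in the text, and the sketch assumes that WIN only has an effect while the battle loop is playing.

```python
from enum import Enum


class Phase(Enum):
    STOPPED = "stopped"
    BATTLE_INTRO = "battle intro"    # bttleIntro.wav
    BATTLE_LOOP = "battle loop"      # bttlLoop.wav
    VICTORY_INTRO = "victory intro"  # vicIntro.wav
    VICTORY_LOOP = "victory loop"    # file name not given in the text


# What plays next when the current sound file reaches its end.
NEXT_ON_FINISH = {
    Phase.BATTLE_INTRO: Phase.BATTLE_LOOP,
    Phase.BATTLE_LOOP: Phase.BATTLE_LOOP,      # retriggers itself
    Phase.VICTORY_INTRO: Phase.VICTORY_LOOP,
    Phase.VICTORY_LOOP: Phase.VICTORY_LOOP,    # retriggers itself
}


class BranchingExample1:
    """Hypothetical model of the Tab 1 START / WIN / STOP behaviour."""

    def __init__(self):
        self.phase = Phase.STOPPED

    def start(self):
        # A second START while music is playing is ignored.
        if self.phase is Phase.STOPPED:
            self.phase = Phase.BATTLE_INTRO

    def win(self):
        # Assumption: WIN only has an effect during the battle loop.
        if self.phase is Phase.BATTLE_LOOP:
            self.phase = Phase.VICTORY_INTRO

    def stop(self):
        # STOP returns everything to the initial state.
        self.phase = Phase.STOPPED

    def file_finished(self):
        # Stands in for the trigger sent when a sound file ends.
        self.phase = NEXT_ON_FINISH.get(self.phase, Phase.STOPPED)
```

Calling `start()`, then `file_finished()` twice, leaves the model in the battle loop; `win()` followed by `file_finished()` moves it through the victory introduction into the victory loop.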

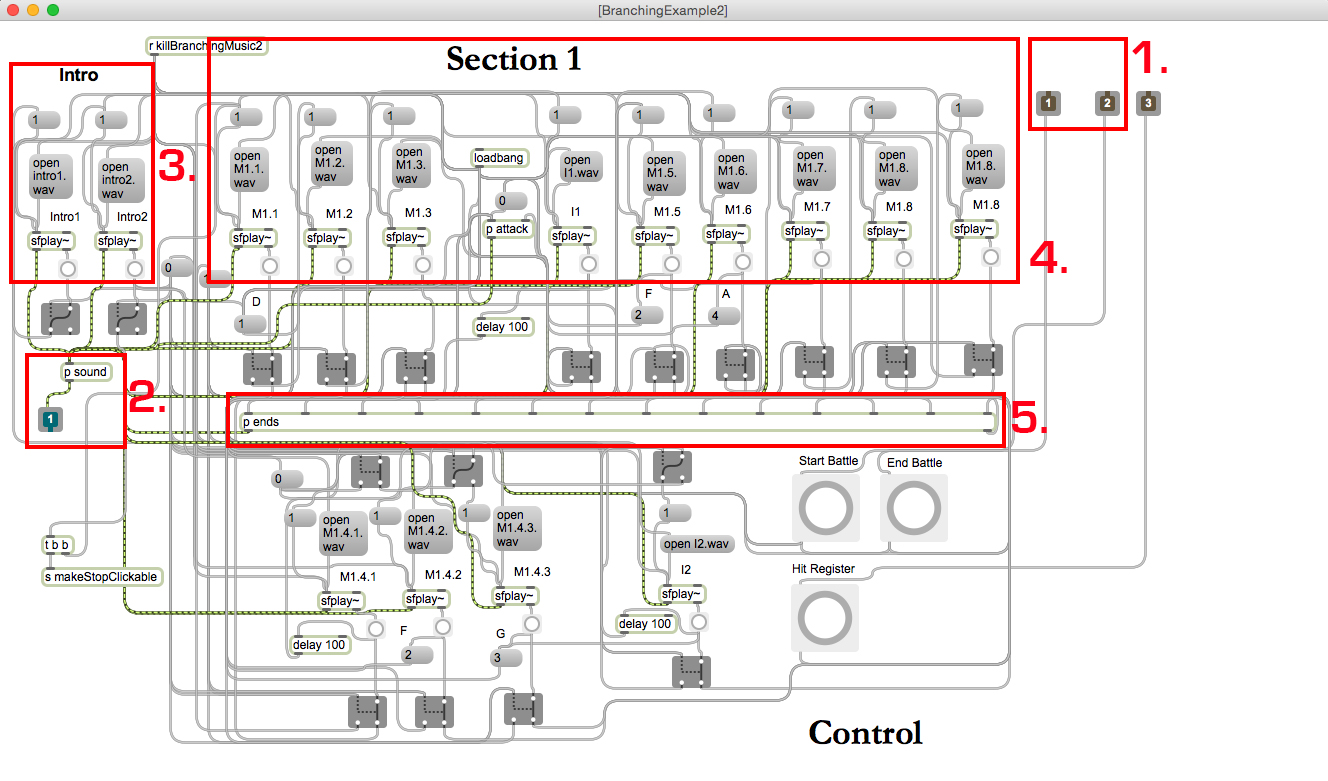

Tab 2 – Branching Example 2 Subpatch

This subpatch deals with the work logic for Tab 2, which plays a pixelated version of a piece resembling music from the Final Fantasy series. Separating the music into individual pixels affords the program the knowledge of its progress through the work. This can then be used for dynamically reactive purposes in video games. This patch is a working version of a branching music engine, which shows that from any point in the main body of music, an appropriate ending music can be selected. This ending was composed to aesthetically complement the exact bar in the music where the ‘win’ switch was made and therefore where the win condition was achieved by the player of the game. This results in a complementing artistic convergence of the change in game-state with an aesthetically consistent change in musical-state.

Demonstrations_Application Figure 3 – Found in the BranchingExample2 subpatcher in the bPatcherGenerativeMusicExamples.maxpat file top-level patcher.

Again in this patch, the input is taken from the main top-level patcher through the inlets in annotation 1 (See Figure 3), passed through the system and results in signal output which is sent out of the outlet in annotation 2.

Upon pressing the START button the intro sfplay~ objects in annotation 3 will be triggered in sequence and will play pixels 1 and 2 of the introduction music (intro1.wav and intro2.wav). Once this is complete the final sfplay~ object of the section will send a start signal to the first sfplay~ object in the main looping section of the work. The sfplay~ objects making up this section can be found in annotation 4. These sfplay~ objects are triggered one-by-one in sequence from the left side of the patch to the right until the final one in the sequence is played. When the final sfplay~ object in this section has finished playing, the first sfplay~ object in this section will be retriggered and the cycle will continue again. Coupled with the start of each of these musical pixels is the opening of individual gates linking each individual pixel with the appropriate ending found in the ‘ends’ subpatch in annotation 5. When the STOP trigger is hit by the user the gate leading to the correct ending will be open, and therefore the next sfplay~ object to be given a start message will be the sfplay~ object loaded with an ending sound file compositionally linked to the previously playing musical pixel. Other logic that is not included in this discussion pertains to stopping the user from being able to press START twice in a row, which would result in too many ‘on’ messages getting sent through the system.
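The pairing of each looping pixel with its own ending can be sketched in Python as a lookup that is updated whenever a new pixel starts. This is a minimal illustration under stated assumptions: only intro1.wav and intro2.wav are named in the text, so the number of looping pixels (four here) and the names main1.wav and end1.wav onwards are hypothetical, as is the choice to play no ending if STOP is pressed during the introduction.

```python
INTRO = ["intro1.wav", "intro2.wav"]

# Hypothetical looping pixels and their matching endings.
LOOP = ["main1.wav", "main2.wav", "main3.wav", "main4.wav"]
ENDINGS = {name: f"end{i}.wav" for i, name in enumerate(LOOP, start=1)}


def render(pixels_before_stop):
    """Return the files heard if STOP is pressed during the Nth pixel played."""
    played = []
    open_gate = None  # the ending currently selected by the open gate
    for n in range(pixels_before_stop):
        if n < len(INTRO):
            current = INTRO[n]
        else:
            # The looping section wraps around to its first pixel.
            current = LOOP[(n - len(INTRO)) % len(LOOP)]
            # Starting a pixel opens the gate to its own ending.
            open_gate = ENDINGS[current]
        played.append(current)
    if open_gate is not None:
        played.append(open_gate)
    return played
```

For example, `render(4)` ends with `end2.wav` because the second looping pixel was playing when STOP arrived, while `render(7)` wraps around the loop and ends with `end1.wav`.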

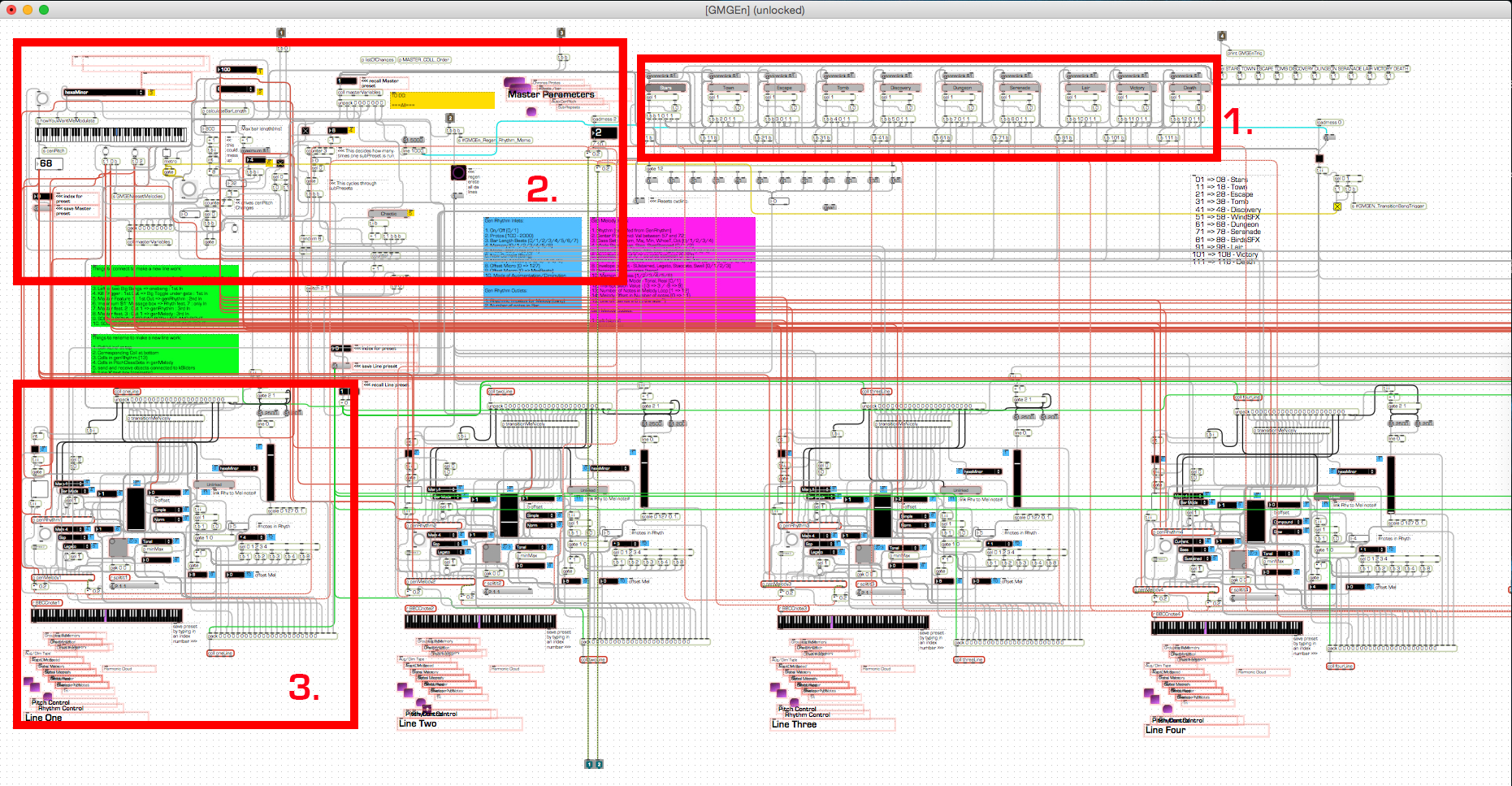

Tab 5 – Game Music Generation Engine (GMGEn)

This subpatch deals with the work logic for Tab 5. This tab houses a scaled-down user interface that mimics the kind of transitional triggers that may occur in a video game scenario. At a high level, upon clicking one of the buttons corresponding to a musical personality, the system will play music according to preconfigured (composed) rules that align with that particular personality. This state will cycle through some further preconfigured logic as part of the same personality until a new state is triggered by the user. When a new state is triggered, a transitional period of music occurs. During this transitional period, musical elements of the first personality are switched to the role they will perform in the new musical personality. As this happens in a semi-random way for each musical element, and asynchronously for each element, the effect is that of a gradual stylistic shift from the old personality to the new. Each musical personality can therefore be attached to a specific game state and can be used for dynamically reactive purposes. This patch is a working version of a generative state-based musical engine that is capable of transitioning between different preconfigured states given a single trigger. This results in a complementing artistic convergence of the change in game-state with the aesthetically consistent change in musical-state.

As the GMGEn patch logic is complex I will split discussion into three separate subsections. These sections will follow the three highlighted areas in Demonstrations_Application Figure 4 below.

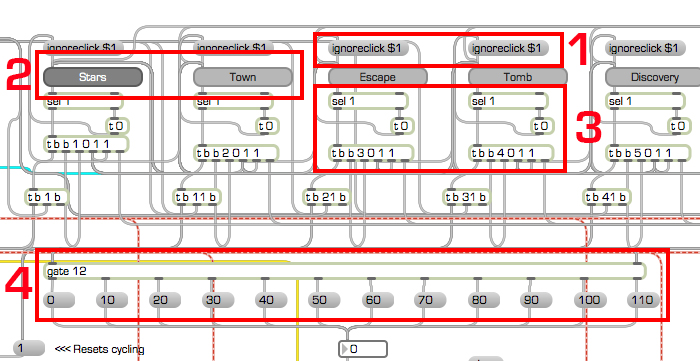

Demonstrations_Application Figure 4 – General overview of the main subpatcher for GMGEn found in the GMGEn subpatcher in bPatcherGenerativeMusicExamples.maxpat file top-level patcher.

At this level of zoom only general areas of the GMGEn patcher can be made out. This screenshot is included both to give an idea of the scale of this patcher and to highlight areas of the patch that are working towards similar purposes. These can roughly be grouped into the three areas shown in figure 4. Each area is explained more fully in the sections below. Annotation 1 holds the main personality switches, which trigger a change to a new preconfigured personality. Highlighted in annotation 2 is the logic controlling large-scale features of the musical personality, for example: tempo. Highlighted in annotation 3 is one of six musical ‘lines’. There are six identical areas of the GMGEn patch acting as individual lines. A line is the equivalent of a single instrument in an acoustic work. In effect GMGEn is therefore a digital sextet of these musical lines. I will now discuss each of the subsections highlighted by annotations 1 – 3 in figure 4 separately.

Subsection 1

This subsection of the main GMGEn patcher controls the logic that responds to the mouseclick. This sets up the master parameters (discussed below) and the musical lines to receive the correct values, which correspond to the preconfigured musical personalities. This logic is the same for each personality used in the Demonstrations_Application.

Demonstrations_Application Figure 5 – Subsection 1 of the main subpatcher for GMGEn found in the GMGEn subpatcher in bPatcherGenerativeMusicExamples.maxpat file top-level patcher.

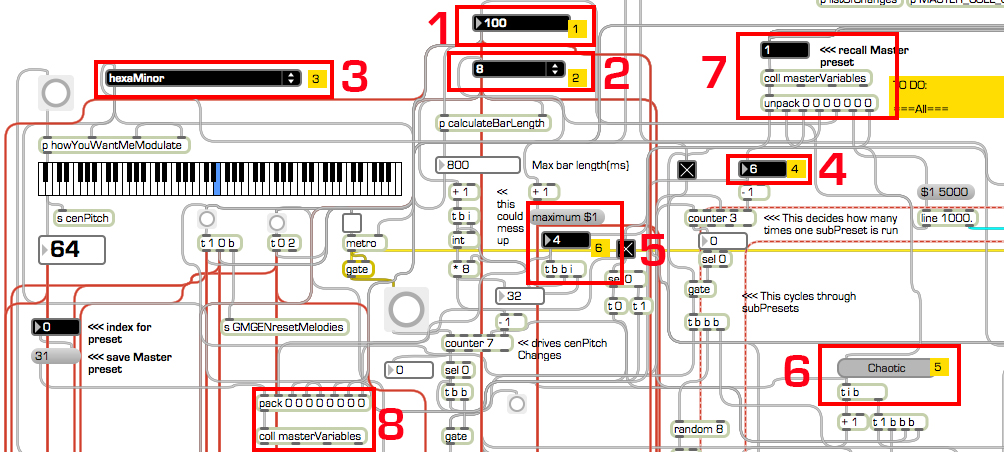

Annotation 1 (See Figure 5) shows the ignore click messages which are sent to buttons in the ‘on’ position to stop them from being clicked off. This functionality needed to be built to accommodate the transitioning feature of the GMGEn system. I designed the system so that only a change to a new personality would signal a turning ‘off’ of the currently active personality. Annotation 2 shows the individual personality switches. When a switch is set to ‘on’, the trigger object shown in annotation 3 activates the new parameter set for the new personality and passes this to the subsections dealing with the transition phase (this information is passed to both subsection 2 and subsection 3). Annotation 4 shows part of this transfer. Each of the trigger objects in annotation 3 opens this gate to a specific value; for example, ESCAPE sends a 3 and therefore triggers the ‘20’ value to be passed out of the gate. This ‘20’ value corresponds to a more detailed collection of memory values for the ‘escape’ personality and will be picked up and used to recall these memory values further into the patch logic. This is explained below.

Subsection 2

This subsection of the main GMGEn patcher controls the logic governing high-level features of the current personality. These musical elements are the most important in creating a consistent personality that can affect the listener in a particular way. While these high-level parameters are complemented by the lower-level features that are shown in the 3rd subsection, the greatest contribution to the style of the personality comes from these high-level (or master) parameters. To configure a personality I needed to save the settings of the parameters into a collection of data that could be recalled by the program to perform the same logical operations again, and thus generate music with the same feel again. The collection of data that encompasses a single personality is made up of many smaller subsets, which I called subPresets. Each subPreset is a snapshot of the positions of all variables of the program at once. Creating groups of complementing subPresets allows for greater interest within the framework of a single personality. Therefore, in this configuration of GMGEn, each musical personality has 8 subPresets that make up the whole. Musically the function of the subPresets is to provide different musical interest within the same personality. For example, subPreset_1 could be a short portion of a 3-part chorale. Using the second subPreset allows the addition of a short portion of a 4-part section to the same chorale. Cycling through these different subPresets allows the composition of a multi-sectioned personality, affording the personality greater artistic scope and the ability to maintain a listener’s aesthetic interest over an enduring period of time.

Demonstrations_Application Figure 6 – Subsection 2 of the main subpatcher for GMGEn found in the GMGEn subpatcher in bPatcherGenerativeMusicExamples.maxpat file top-level patcher.

Annotation 1 and 2 (See Figure 6) show two features governing the bar. The parameter in annotation 1 controls the smallest note duration in milliseconds and the parameter in annotation 2 controls how many beats there are in a bar. These values are used in the 3rd subsection when generating rhythm for a single bar or section of repeated bars. The parameter in annotation 3 governs the way harmonic modulations can occur. These modulations occur at specific intervals governed by the parameter in annotation 5. In the example shown, the number 4 would trigger a harmonic modulation to a new ‘tonic’ note after 4 repeats of a phrase. I called this note the Center Pitch as it doesn’t act like a tonic in the traditional sense. In summary, the parameter in annotation 3 governs the constrained random choice of what the next center pitch can be.

As was mentioned above, each musical personality is made up of smaller subPresets. These subPresets repeat until the next subPreset is triggered. The point at which a new subPreset is triggered is controlled by the parameter in annotation 4 and the order in which they are triggered by the method selected on the parameter shown in annotation 6. This parameter can be either CHAOTIC or CYCLIC. CHAOTIC moves through the subPresets in a random order (a musical analog to mobile form), while CYCLIC moves through the subPresets from 1 to 8 then back to 1 again until the personality is switched. Both of these modes are useful for different styles of musical personalities.
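The two ordering methods can be sketched in a few lines of Python. This is an illustrative sketch rather than the patch logic itself: the function name is hypothetical, and the text does not say whether CHAOTIC may pick the same subPreset twice in a row, so the sketch assumes that it may.

```python
import random


def next_subpreset(current, mode, rng, count=8):
    """Return the number (1..count) of the subPreset to play next."""
    if mode == "CYCLIC":
        # 1, 2, ..., 8, then back to 1.
        return current % count + 1
    if mode == "CHAOTIC":
        # Assumption: any subPreset may follow, including the current one.
        return rng.randint(1, count)
    raise ValueError(f"unknown mode: {mode}")


rng = random.Random(0)
cyclic_order = [1]
for _ in range(9):
    cyclic_order.append(next_subpreset(cyclic_order[-1], "CYCLIC", rng))
# cyclic_order is now [1, 2, 3, 4, 5, 6, 7, 8, 1, 2]
```

Passing the random generator in as an argument keeps the CHAOTIC ordering reproducible when a fixed seed is wanted for testing.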

The coll objects in annotation 7 and 8 hold the data relating to the master parameters. A coll object is a collection of data stored for use later. The coll object in annotation 7 is used to recall the data snapshot and then populate the parameter boxes with this data. The coll object in annotation 8 is used when saving new data snapshots for a new subPreset. This small section of logic was used in my original configuration of the subPresets and could be used again to save new versions. This save and recall method is used throughout GMGEn to create and recall snapshots of data.

Subsection 3

GMGEn is a generative instrument that uses six musical ‘lines’ in combination to create its sound. The multitude of possible relationships between each of the musical lines allows for an abundance of generated musical content. This subsection of the main GMGEn patcher controls the logic governing the low-level features of one of the six lines for the current personality. This logic is repeated for each musical line. The set up of these musical elements is key to creating the musical detail that can be generated by the current personality. These low-level features complement the high-level features (master parameters) that are discussed above in the second subsection. The same save and recall mechanism has been used here to generate music with the same feel.

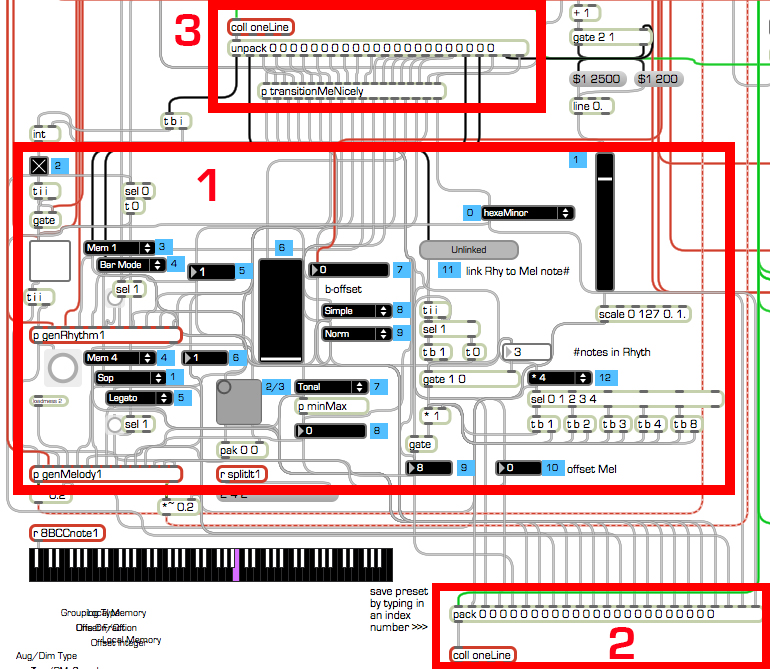

Demonstrations_Application Figure 7 – Subsection 3 of the main subpatcher for GMGEn found in the GMGEn subpatcher in bPatcherGenerativeMusicExamples.maxpat file top-level patcher.

This subsection (See Figure 7) is largely split into two sections shown in annotation 1. One governs control over the rhythmic detail and the other controls melodic detail. There are two subpatchers (genRhythm1 and genMelody1), which take into account these settings and output a list of pitches to the dac to be played. Those governing rhythm include the following: bar mode, offset fraction, offset integer, aug/dim type, aug/dim speed. Those governing melody include the following: timbre, harmonic cloud, range type, envelope type, transposition mode, transposition value, notes looped. There are also some parameters that affect the line more generally, including those governing the mix level or whether the line is switched on or off.

I will now give a brief explanation of the parameters for both rhythm and melody.

Bar Mode has two settings, Bar or Free. The ‘Bar’ setting uses the master value for the smallest note length and number of beats in the bar to calculate a maximum value for the bar. For example: with 100ms as the smallest note length and 8 beats in the bar, this equals a total bar size of 800ms. The ‘bar’ mode setting will now group the randomized rhythms generated inside this 800ms boundary in segments using multiples of 100ms. This is useful when configuring lines for rhythmic texture. Using the same example master variables, the ‘free’ mode setting will still group the generated rhythms into multiples of 100ms but will not necessarily group them rigidly inside the bar construct. This is useful for attaching to lines that will perform a more soloistic role within the subPreset.
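The arithmetic in this example, and the difference between the two modes, can be sketched in Python. The function names and the way random durations are drawn are assumptions made for illustration; the text only specifies that durations are multiples of the smallest note length and that ‘Bar’ mode keeps them inside the bar.

```python
import random


def bar_length_ms(smallest_note_ms, beats_per_bar):
    # 100 ms x 8 beats gives an 800 ms bar.
    return smallest_note_ms * beats_per_bar


def generate_bar_rhythm(smallest_note_ms, beats_per_bar, rng):
    """'Bar' mode: random durations that exactly fill one bar."""
    remaining = beats_per_bar
    durations = []
    while remaining > 0:
        units = rng.randint(1, remaining)  # never overshoots the bar
        durations.append(units * smallest_note_ms)
        remaining -= units
    return durations


def generate_free_rhythm(smallest_note_ms, note_count, max_units, rng):
    """'Free' mode: same grid of multiples, but no bar boundary is enforced."""
    return [rng.randint(1, max_units) * smallest_note_ms
            for _ in range(note_count)]
```

With 100 ms and 8 beats, every list returned by `generate_bar_rhythm` sums to 800 ms, whereas the total of a list from `generate_free_rhythm` depends only on the random draw.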

Offset fraction and offset integer are settings that apply a delay to the rhythm, pushing the rhythms out (late) by certain amounts. The offset integer setting pushes the rhythm out by integer multiples of the smallest note length in milliseconds. The offset fraction is a slider, which pushes the rhythm out by values between 0 and 1. A value of zero applies no delay to the rhythm. A value of 1 applies a one beat delay to the rhythm. Giving two musical lines the same melodic and rhythmic content (therefore making them play identical material) while applying a slight rhythmic offset to one of the two lines can produce effects such as echo or Reichian phasing.
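As a worked illustration, the delay produced by the two offset settings can be computed as follows. The sketch assumes that one beat equals the smallest note length and that the integer and fractional offsets simply add together; neither point is stated explicitly in the text, and the function names are hypothetical.

```python
def offset_delay_ms(smallest_note_ms, offset_integer=0, offset_fraction=0.0):
    """Delay in milliseconds applied to a line's rhythm."""
    if offset_integer < 0:
        raise ValueError("offset_integer must not be negative")
    if not 0.0 <= offset_fraction <= 1.0:
        raise ValueError("offset_fraction must lie between 0 and 1")
    # Assumption: the two offsets are summed before scaling.
    return (offset_integer + offset_fraction) * smallest_note_ms


def apply_offset(onsets_ms, delay_ms):
    """Push every note onset later by the same delay."""
    return [t + delay_ms for t in onsets_ms]


# Two lines with identical material, the second slightly late (an echo).
line_a = [0, 100, 300]
line_b = apply_offset(line_a, offset_delay_ms(100, offset_fraction=0.25))
# line_b is [25.0, 125.0, 325.0]
```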

Aug/dim type and aug/dim speed are used to create augmentations or diminutions of the generated rhythmic values based on the generated rhythmic criteria. Aug/dim type has two settings: compound and simple. These have the effect of changing any augmentation or diminution of the generated rhythmic values into either simple or compound subdivisions or multiples based on the aug/dim speed value. The aug/dim speed has 5 settings: Normal, Fast, Slow, Very Fast, and Very Slow. The ‘normal’ setting applies no adjustment to the rhythm. ‘Fast’ and ‘Very Fast’ apply a diminution while ‘Slow’ and ‘Very Slow’ apply augmentations to the generated rhythmic values. This is useful for applying rhythmic interest to the same melodic passages, which may have been used previously, allowing them to be compositionally developed inside a generative system.

On the melodic side of the generative settings the Timbre matrix can mix together different proportions of four signal types to produce a variety of different electronic timbral qualities. This is a 2 dimensional slider that is saved as different values of x and y. This feature is used to create different timbral families to help accentuate a particular role the line may be performing. Coupled with this is the envelope type feature, which has several settings: sustained, legato, staccato and swell. These envelope types add further variety to the timbre matrix.

The Harmonic Cloud feature is explained in detail in the critical writing portion of the thesis. In short, this amounts to different collections of pitches organized together to perform a particular role in the creation of the mood of the personality.

Range type shifts the musical line up or down octaves based on the voice role it will perform. Therefore, there are typical choices between bass, baritone, alto, soprano and hyper soprano. With alto as the neutral position, baritone and bass are shifted down one and two octaves respectively while soprano and hyper soprano are shifted up one and two octaves respectively from the generated midi pitch values.
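The octave shifts described here amount to adding a multiple of 12 to each generated MIDI pitch. A minimal Python sketch follows; the dictionary and function names are hypothetical.

```python
# Octave shift for each range type, with alto as the neutral position.
OCTAVE_SHIFT = {
    "bass": -2,
    "baritone": -1,
    "alto": 0,
    "soprano": 1,
    "hyper soprano": 2,
}


def apply_range(midi_pitches, range_type):
    """Shift generated MIDI pitches by whole octaves (12 semitones each)."""
    shift = 12 * OCTAVE_SHIFT[range_type]
    return [pitch + shift for pitch in midi_pitches]


# Middle C (60) and the E above it (64), moved into the bass range.
bass_line = apply_range([60, 64], "bass")  # [36, 40]
```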

The transposition mode and transposition value settings govern a shift in pitch of the generated melody line. This can be used to create chordal textures by combining two (or more) lines playing the same material with differing transposition values. The transposition value can be set higher or lower than the unaffected line to create many different chordal combinations. The transposition mode has two settings: real or tonal. Real transposes up or down the number of semitones shown in the transposition value, while Tonal transposes the line up or down based on the relative tonal positions set up in the harmonic cloud. This is analogous to the terms used in the analysis of real vs tonal answers to musical phrases in Renaissance and Baroque polyphonic music.
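The difference between the two modes can be shown with a short Python sketch. It rests on an assumption that is not taken from the patch: the harmonic cloud is modelled here as a sorted list of pitch classes (0 to 11), and tonal transposition moves each note by a number of steps within that list. The function names are hypothetical.

```python
def transpose_real(midi_pitches, semitones):
    """Real: every note moves by the same number of semitones."""
    return [p + semitones for p in midi_pitches]


def transpose_tonal(midi_pitches, steps, cloud):
    """Tonal: every note moves by `steps` positions within the cloud.

    `cloud` is a sorted list of pitch classes; each input pitch is
    assumed to belong to it.
    """
    result = []
    for pitch in midi_pitches:
        octave, pitch_class = divmod(pitch, 12)
        degree = cloud.index(pitch_class) + steps
        # Wrap around the cloud, carrying into the next octave up or down.
        octave_carry, degree = divmod(degree, len(cloud))
        result.append((octave + octave_carry) * 12 + cloud[degree])
    return result


c_major_cloud = [0, 2, 4, 5, 7, 9, 11]
triad = [60, 64, 67]                                   # C, E, G
real = transpose_real(triad, 2)                        # [62, 66, 69]: D, F sharp, A
tonal = transpose_tonal(triad, 1, c_major_cloud)       # [62, 65, 69]: D, F, A
```

The real transposition keeps the major triad intact and leaves the cloud (F sharp), while the tonal transposition stays inside the cloud and yields a minor triad.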

The notes looped parameter is used to set how far through the generated list of pitches the generator plays. For example, if the generated list contains the values A, B, C and D, the pitches will cycle through in order from pitch 1 (A) to pitch 2 (B) until reaching pitch 4 (D) and then will begin again. Inputting a value of 3 into the notes looped parameter will truncate the pitch at index position 4 and will instead loop through A, B, C, A, B, C, A…etc. This is useful for creating rhythmic arpeggiated lines, oscillating lines, or for creating lines of single repeated notes, which, for example, might be useful for an idiomatic bass guitar-style.
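The truncate-and-loop behaviour in this example can be sketched in Python as follows; the function name and the `length` argument (how many notes to produce) are hypothetical.

```python
from itertools import cycle, islice


def looped_pitches(generated, notes_looped, length):
    """Cycle through only the first `notes_looped` generated pitches."""
    if notes_looped < 1:
        raise ValueError("notes_looped must be at least 1")
    return list(islice(cycle(generated[:notes_looped]), length))


pitches = ["A", "B", "C", "D"]
arpeggio = looped_pitches(pitches, 3, 7)   # A, B, C, A, B, C, A
repeated = looped_pitches(pitches, 1, 4)   # A, A, A, A
```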

Both annotation 2 and 3 show the save and recall part of the patch logic. The save logic is the same as that described in subsection 2; however, the recall logic has a fundamental difference that allows GMGEn to trigger stylistic transitions between two different personalities. When a new set of parameters is recalled by a personality change, the new values are passed through this cascade logic. This logic withholds the new parameter setting for a time before passing it out to the rest of the system. It withholds each new parameter setting for a different amount of time and thus allows some of the settings of the previous personality to merge with those of the new personality. This cascading feature is the mechanism allowing the stylistic transition from one personality to another within GMGEn.
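The cascade can be illustrated with a short Python sketch in which each recalled parameter is given its own delay before it replaces the old value. This is a sketch under stated assumptions, not the patch logic: the function names, the example parameter values, the uniform random distribution and the `max_delay_ms` ceiling are all hypothetical.

```python
import random


def schedule_cascade(new_settings, max_delay_ms, rng):
    """Assign each parameter its own withholding time in milliseconds."""
    return {name: rng.uniform(0, max_delay_ms) for name in new_settings}


def settings_at(elapsed_ms, old_settings, new_settings, delays):
    """Settings in force `elapsed_ms` after the personality change."""
    return {
        name: new_settings[name] if elapsed_ms >= delays[name]
        else old_settings[name]
        for name in new_settings
    }


# Hypothetical parameter values for two personalities.
old = {"bar mode": "Bar", "range type": "bass", "envelope type": "legato"}
new = {"bar mode": "Free", "range type": "soprano", "envelope type": "staccato"}

delays = schedule_cascade(new, max_delay_ms=4000, rng=random.Random(0))
midway = settings_at(2000, old, new, delays)
```

Partway through the transition, `midway` holds a mixture of old and new values, which corresponds to the merging of the two personalities described above; once the longest delay has elapsed, every parameter belongs to the new personality.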